The Journey into Automated Testing in Pega Constellation

"Quality is never an accident. It is always the result of intelligent effort."

- John Ruskin

Introduction: The Journey into Automated Testing in Pega Constellation

Automated testing is key to delivering high-quality applications efficiently. This article kicks off a series on how test automation works in Pega Constellation - starting from the basics and leading all the way to real-life business benefits. Whether you're new to testing or have experience with traditional web applications, this guide will provide a structured, hands-on approach to automating Pega applications effectively.

What you’ll learn

By the end of this series, you'll be able to:

- Understand how testing in Pega Constellation differs from traditional applications.

- Choose the right tools for UI and API testing.

- Set up an automated testing environment.

- Compare different testing tools and choose the best for your use case.

- Run and expand automated tests efficiently.

- Scale automation for enterprise-level CI/CD and regression testing.

- Easily generate and manage test data.

Why this matters

We all understand the importance of testing - or perhaps we’ve experienced firsthand what happens when software isn’t tested properly. Last-minute bug fixes, unexpected crashes, frustrated users, and endless rework are common pain points. Without a solid testing strategy, small issues can turn into major roadblocks, delaying releases and driving up costs.

This article brings everything together in one place - the key ideas, best practices, and a clear picture of where testing fits into the SDLC. It serves as a concise, structured summary of the most important concepts, making it easier to grasp the essentials without getting lost in unnecessary complexity.

While we may already recognize the value of testing, not everyone in our organizations does - and even within teams, priorities often shift. This is an opportunity to refresh and structure our knowledge, ensuring we’re confident in what to automate, what to test manually, and where to focus efforts.

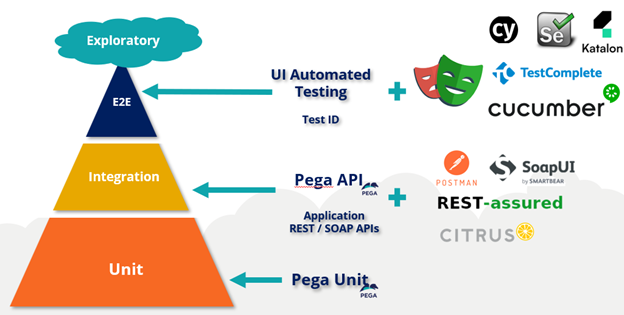

If we ever need to make a case for automated testing to our teams or stakeholders, this article will provide the key arguments to support that conversation. We’ll also introduce the testing pyramid, helping us strike the right balance between different types of tests and highlighting where UI automation, especially in Pega Constellation, fits into the bigger picture.

🎬 Automated Testing in action

Before diving into the details, let’s see automation in action! Watch this quick demo of how a Pega Constellation application can be tested automatically - validating workflows, UI, and APIs seamlessly to identify issues early.

The video shows how automation simplifies testing, speeds up validation, and detects issues early. But how does this translate into real-world savings? Let’s break it down.

The true cost of poor software quality

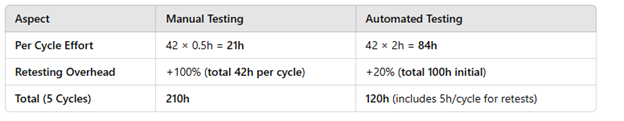

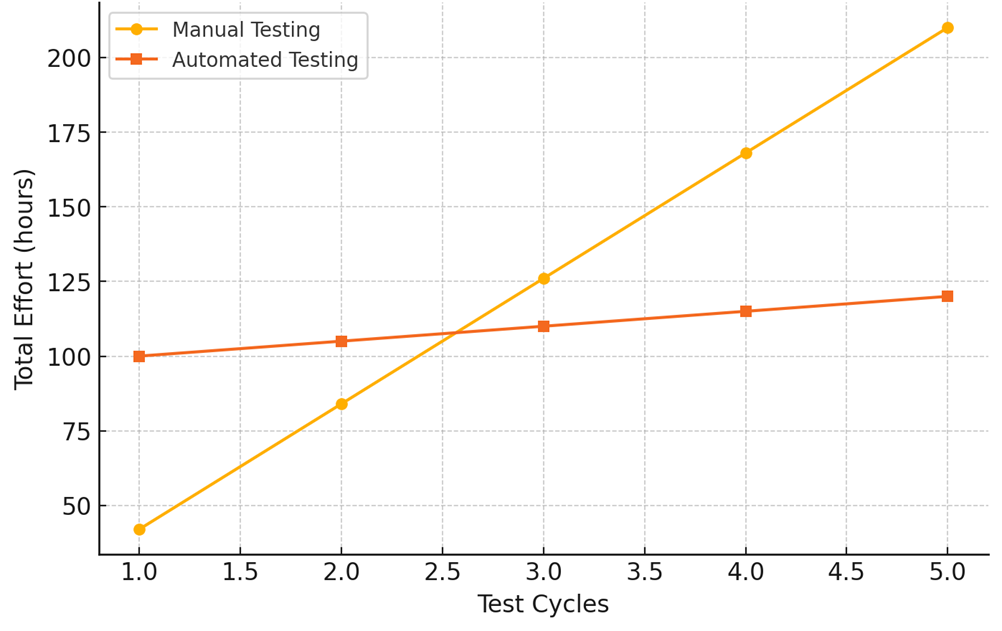

To quantify the impact, we modeled a process with one case type consisting of 7 stages and 14 screens, with each screen having 3 test scenarios - totaling 42 test cases. Below is the effort breakdown per test cycle, including retesting overhead:

Scenario Setup:

- Case Type: 7 stages × 2 screens = 14 screens

- Test Scenarios: 3 per screen → 42 scenarios
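The scenario count in the setup above follows from simple multiplication. A minimal sketch of that arithmetic, with illustrative variable names and the figures taken from the list above:

```python
# Scenario-count arithmetic for the modeled case type.
# Figures come from the scenario setup; the names are illustrative only.
STAGES = 7
SCREENS_PER_STAGE = 2
SCENARIOS_PER_SCREEN = 3

screens = STAGES * SCREENS_PER_STAGE          # 7 x 2 = 14 screens
scenarios = screens * SCENARIOS_PER_SCREEN    # 14 x 3 = 42 test scenarios

print(f"{screens} screens, {scenarios} scenarios per test cycle")
```

Changing any one of the three inputs shows how quickly the number of scenarios per cycle grows as a case type gains stages or screens.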

The results are clear:

Ensuring software quality isn’t just about avoiding defects - it’s about protecting time, money, and reputation. Delayed testing leads to massive financial and operational losses, and real-world failures prove just how devastating poor software can be.

From finance to healthcare and transportation, even a brief outage can halt transactions, delay critical medical procedures, or ground entire fleets - triggering widespread disruption and severe financial consequences. The numbers speak for themselves:

- $2.41 trillion – Estimated U.S. losses due to poor software quality (CISQ, 2022).

- NASA Mars Climate Orbiter (1999) – $125M Lost – A simple unit conversion error caused total mission failure.

- Facebook 14-Hour Outage (2019) – $90M Lost – A misconfiguration disrupted Facebook, WhatsApp, and Instagram globally.

- 2024 CrowdStrike Global IT Failure – Billions Lost – A faulty security update crippled airlines, banks, and hospitals.

- Google Cloud Failure (2020) – $1.2M Lost per hour – Disrupted Gmail, YouTube, and Drive, leading to major operational setbacks.

Why do teams avoid it?

Despite its importance, many teams still hesitate to invest in testing. Why? Here are some common reasons – can you recognize some of them in your team?

| Why Don’t We Test? | Why do we test? |
|---|---|
| 🚫 “Testing is tedious.” → But fixing production issues is worse. | ✅ Saves money - Fixing bugs early is cheaper than fixing them in production. |
| 🚫 “It takes too much time.” → Yet skipping tests leads to costly defects. | ✅ Prevention - Catches issues before they cause major failures. |
| 🚫 “Developers prefer coding over testing.” → But a broken release means rework. | ✅ Quality & security - Ensures compliance and prevents cyber threats. |
| 🚫 “Testing is outsourced.” → But relying on clients for QA is risky. | ✅ Performance - Guarantees stability under different loads. |
| | ✅ Customer satisfaction - Higher reliability means happier users. |

When and what to test?

Software testing ensures quality by detecting defects early. It spans functional testing (checking if features work as expected) and non-functional testing (assessing performance, security, and reliability).

Take a moment to reflect: how much testing and quality assurance actually happens in your daily practice? This brief breakdown isn’t just an overview; it’s a chance for a reality check. Are all these aspects truly covered in your projects, or do some fall through the cracks?

We’d love to hear your thoughts! Share your experiences, challenges, or best practices in the comments. Did you score at least 3 points from the list?

| Types of Functional Testing | Types of Non-Functional Testing |
|---|---|
| Unit testing – Verifies individual components. | Performance, Load, and Stress testing – Evaluates speed and stability. |
| Integration testing – Ensures modules work together. | Security testing – Identifies vulnerabilities. |
| System testing – Tests the entire application. | Compliance testing – Ensures regulatory adherence. |
| End-to-end testing – Validates real user workflows. | |
| Regression testing – Detects unintended side effects. | |
| Exploratory testing – Finds unexpected issues manually. | |

When and what to automate?

Not everything should be automated. While automation speeds up repetitive tasks, some tests require human validation. The key is balancing automation with manual testing.

Automated Testing strategies:

- Unit Testing: Focuses on small, isolated components to ensure they function correctly. Detects defects early, making debugging and maintenance easier.

- Integration Testing: Verifies how different modules and external systems interact, ensuring seamless data flow and system stability.

- Functional Testing: Confirms that software features meet user expectations and business requirements.

- UI Testing: Checks for usability, responsiveness, and consistency in user interfaces across devices and platforms.

- Performance Testing: Evaluates system speed, scalability, and reliability under different loads to prevent slowdowns or crashes.

- Security Testing: Identifies vulnerabilities, ensuring the application is protected against unauthorized access and data breaches.

- Exploratory Testing: Unlike automation, exploratory testing is manual and relies on tester intuition. It helps uncover unexpected issues by testing real-world scenarios creatively.

By combining automated and manual testing effectively, teams achieve higher quality, faster releases, and reduced risks.

End-to-End (E2E) testing in Pega: ensuring business process reliability

While unit and integration tests verify individual components, E2E testing ensures the entire workflow functions as expected - from user actions to backend processes. In Pega, this means validating case lifecycles, rule-based processing, integrations, and UI interactions to catch issues before they reach production. The cost of fixing defects increases significantly as software progresses through development, as shown in the NIST (National Institute of Standards and Technology) research graph below.

Core principles for E2E testing

- Covers the entire user journey - verifies how different parts of the system work together by testing interactions across modules, APIs, databases (systems of record), and external services.

- Validates business scenarios - ensures that key workflow scenarios deliver the expected outcomes.

- Emulates real-world usage - accurately reproduces user behavior, system responses, and data flow in realistic conditions.

Types of E2E testing

- Horizontal - focuses on complete business processes that span multiple steps and systems, e.g., submitting a service request, reviewing it, and receiving approval with a notification.

- Vertical - focuses on a single functionality tested across all application layers, from the user interface to APIs and databases. This ensures that the logic, data flow, and backend services work correctly.
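To make the horizontal flavor concrete, here is a minimal sketch of the service-request example written as one test that walks the whole process. `ServiceRequest`, its status names, and the `notifications` list are hypothetical stand-ins for illustration, not Pega APIs; a real suite would drive the application through its UI or APIs rather than an in-memory object.

```python
from dataclasses import dataclass, field


@dataclass
class ServiceRequest:
    """Hypothetical in-memory stand-in for a case moving through stages."""
    status: str = "New"
    notifications: list = field(default_factory=list)

    def submit(self) -> None:
        self._move("New", "Pending-Review")

    def review(self) -> None:
        self._move("Pending-Review", "Pending-Approval")

    def approve(self) -> None:
        self._move("Pending-Approval", "Resolved-Approved")
        # The last step of the business process also notifies the requester.
        self.notifications.append("Your request was approved")

    def _move(self, expected: str, target: str) -> None:
        # Reject out-of-order transitions so a broken flow fails loudly.
        if self.status != expected:
            raise ValueError(f"cannot go to {target} from {self.status}")
        self.status = target


def test_service_request_happy_path() -> ServiceRequest:
    """Horizontal E2E check: submit -> review -> approve -> notification."""
    case = ServiceRequest()
    case.submit()
    case.review()
    case.approve()
    assert case.status == "Resolved-Approved"
    assert case.notifications == ["Your request was approved"]
    return case


if __name__ == "__main__":
    test_service_request_happy_path()
    print("horizontal E2E scenario passed")
```

A vertical test would instead pick one of these steps, for example approval, and assert on what each layer records for it, from the screen down to the stored data.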

Challenges

- Time-consuming & complex - requires extensive setup and maintenance.

- Expensive - demands significant resources compared to unit or integration tests.

- Flaky results - UI-dependent tests can be unstable due to dynamic elements.
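One widely used way to reduce the flakiness mentioned in the last point is to poll for a condition with a timeout instead of pausing for a fixed interval. The helper below is a generic, tool-agnostic sketch; `wait_until` and its parameters are illustrative names and are not part of Selenium, Cypress, or Pega.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool],
               timeout: float = 5.0,
               interval: float = 0.1) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


if __name__ == "__main__":
    # Simulate a dynamic element that becomes "visible" after 0.3 seconds.
    appears_at = time.monotonic() + 0.3
    visible = wait_until(lambda: time.monotonic() >= appears_at, timeout=2.0)
    print("element visible:", visible)
```

The test proceeds as soon as the condition holds, so it is neither slowed down by a generous fixed sleep nor broken by one that is too short. Most UI automation tools ship their own waiting or retry mechanisms, which are usually preferable to a hand-rolled helper.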

Best practices

- Focus on critical workflows - prioritize high-impact user journeys.

- Automate strategically - use tools like Selenium or Cypress for repeatability.

- Optimize test environments - keep them as close to production as possible.

- Balance with unit & integration tests - E2E should complement, not replace, other testing types.

Conclusion

Automated testing is key to delivering reliable applications at scale. In modern DevOps and CI/CD environments, where frequent deployments are essential, automated tests provide a safety net, ensuring stability and preventing regressions before they impact production.

A balanced testing strategy reduces costs, accelerates releases, and improves software quality, making automation a necessity, not a luxury. By integrating automated testing into CI/CD pipelines, teams can achieve faster feedback loops, seamless deployments, and greater development agility.

In one of our upcoming articles, we’ll dive deeper into how automated testing fits into DevOps and CI/CD culture, with a special focus on how Pega Deployment Manager (PDM) streamlines automated testing, orchestrates deployments, and enhances continuous delivery workflows.

💡 How do you approach testing in your projects? Share your experiences, challenges, and best practices in the comments.