Extract case/data in real-time (BIX)

This knowledge sharing article introduces the new real-time BIX extraction feature in Pega Infinity 24.2.

This article shows how to configure real-time BIX extraction that publishes extracted data to Kafka, and how to process that data with a Data Flow.

Pega Infinity version used: 24.2.2

Client use case

Currently, the client uses Pega Business Intelligence Exchange (BIX) to extract case data from the BLOB to a .CSV file and upload it to a Pega Cloud repository. Their reporting and analytics team then consumes the .CSV file, performs an ETL process, and adds the case details to a data warehouse for enterprise reporting and analytics. However, this runs in batch mode using Job Schedulers on a fixed schedule, which causes data latency and delays management decisions.

The client wants their reports to update in real time so that they can make more timely decisions.

Our solution is to use the BIX real-time extraction feature to implement this requirement.

Configurations

The configurations are organized into five parts:

- Configure a Kafka/Data Set where extracted case details will be published in real-time.

- Configure a real-time BIX extract rule.

- Configure a DB table/Data Set, which will be the destination of the extracted case details.

- Configure a Data Flow to process the extracted case details from Kafka and insert/update them to a database table.

- Run a test.

## Part A - Configure a Kafka/Data Set where extracted case details will be published in real-time.

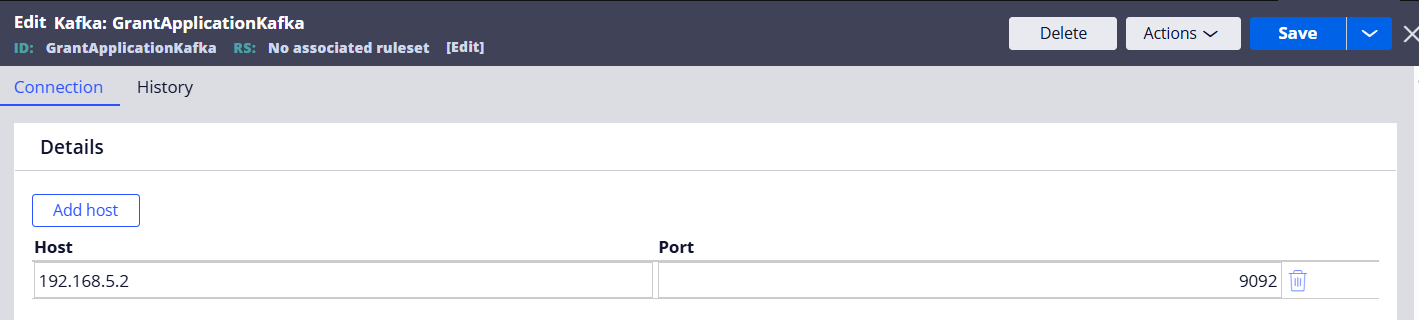

Step 1 - Create a Kafka instance.

Note: Clients should use their own external Kafka.

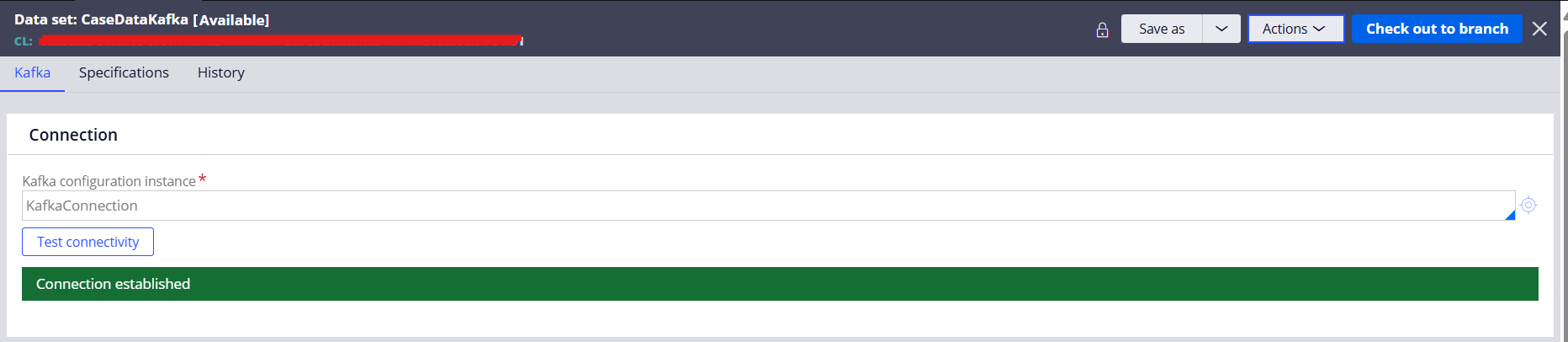

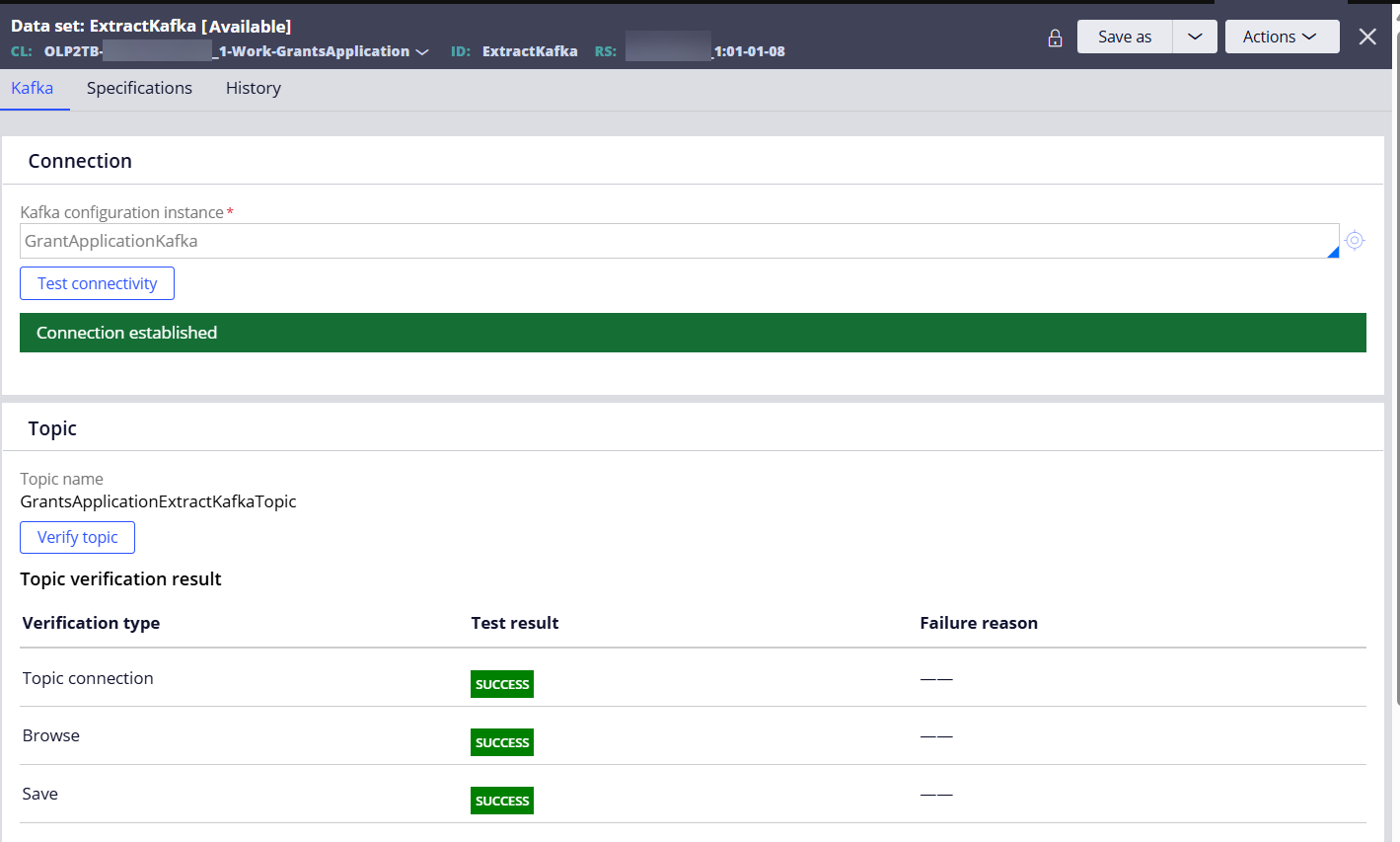

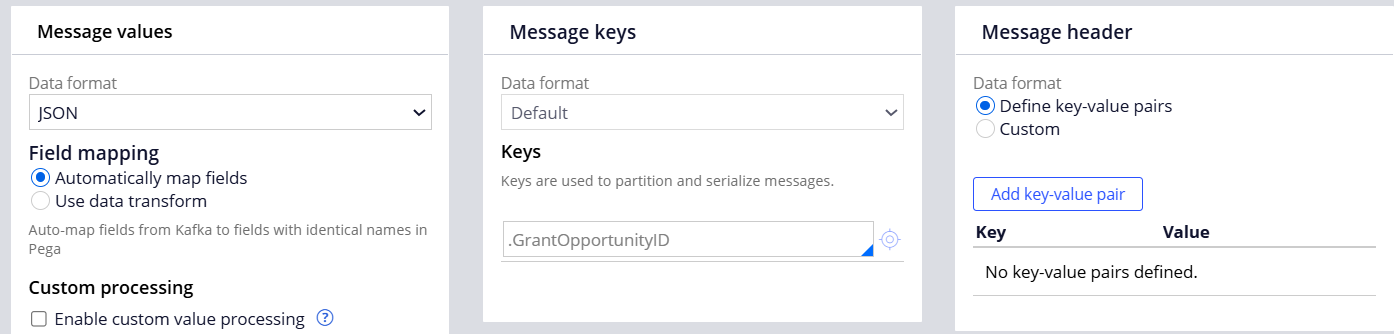

Step 2 - Create a Data Set (type=Kafka) and connect to the Kafka instance created above.

## Part B - Configure a real-time BIX extract rule.

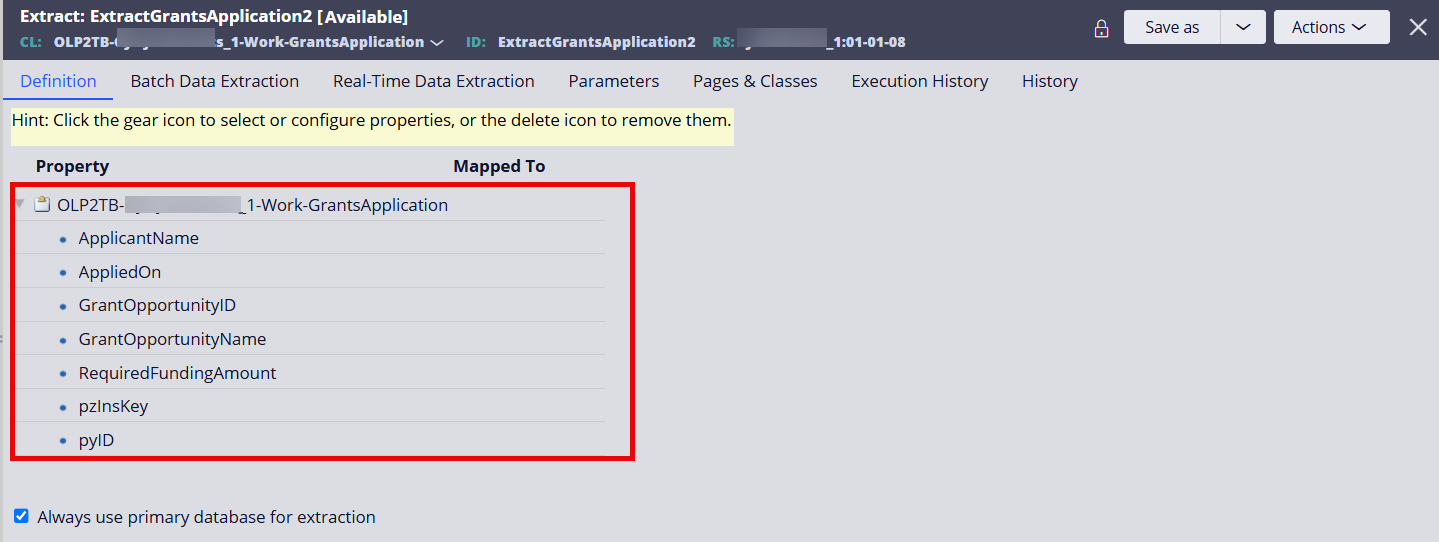

Step 1 - Create an Extract rule and select properties to extract.

We are defining the Extract rule in the GrantsApplication work class.

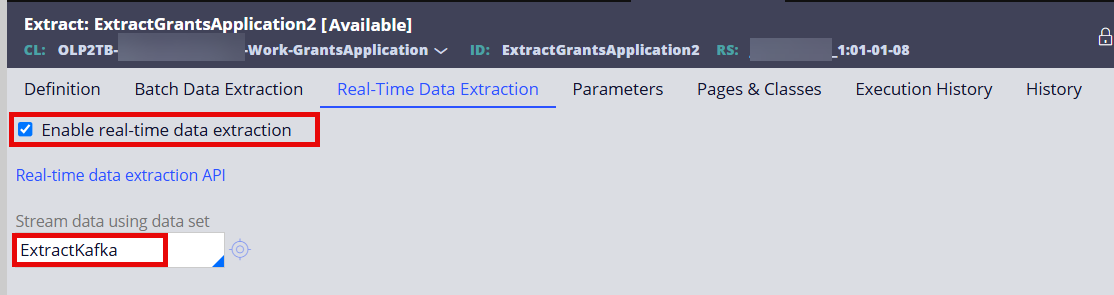

Step 2 - Select the 'Enable real-time data extraction' option and reference the Data Set (Kafka) created above.

## Part C - Configure a DB table/Data Set, which will be the destination of the extracted case details.

In this example, we insert or update the extracted case metadata in a database table after consuming it from the Kafka topic.

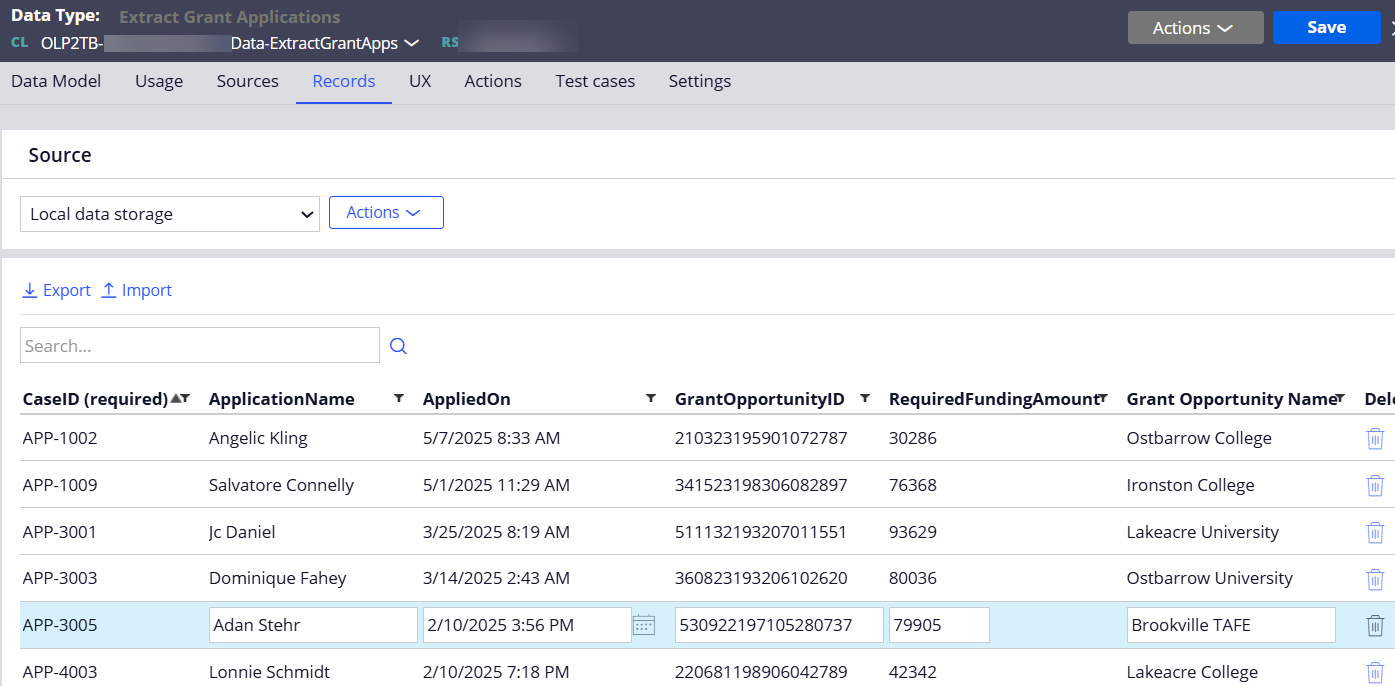

Step 1 - Create a data type table and define the properties to map the extracted case metadata.

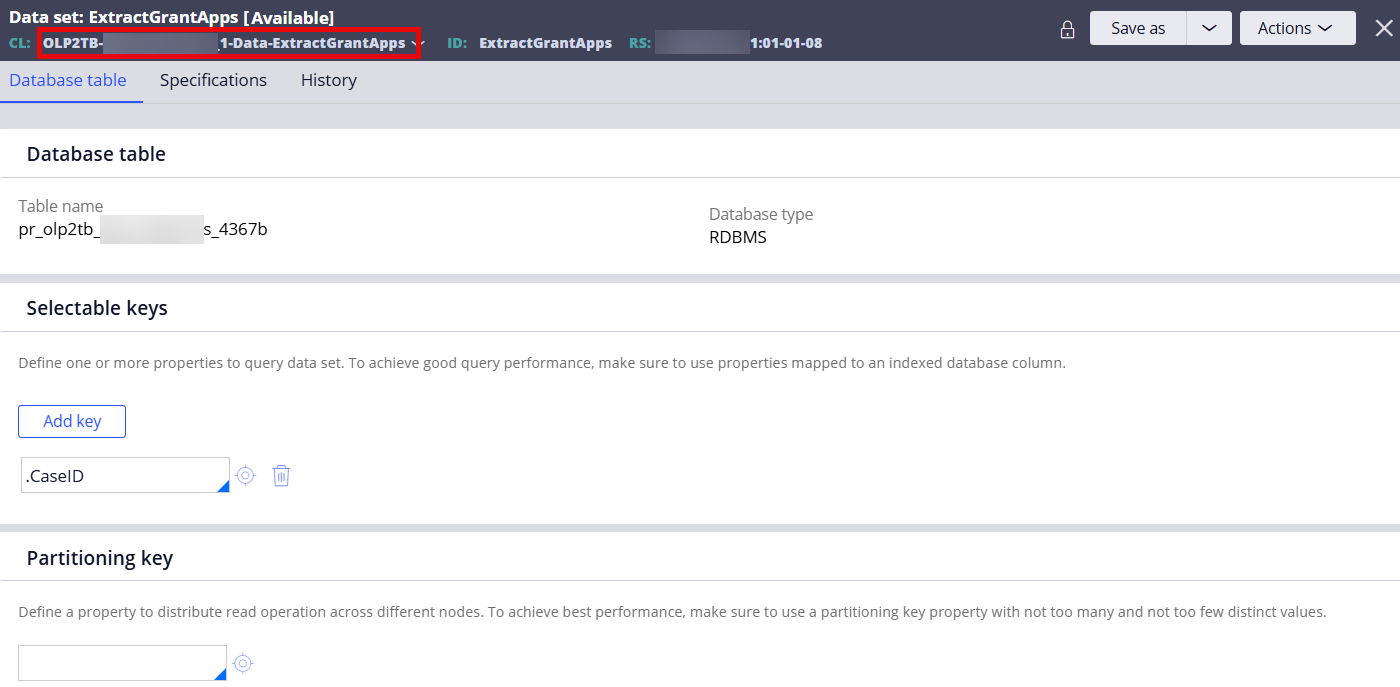

Step 2 - Create a Data Set and reference the data type class created above.

Note: The table name is set automatically.

## Part D - Configure a Data Flow to process the extracted case details from Kafka and insert/update them to a database table.

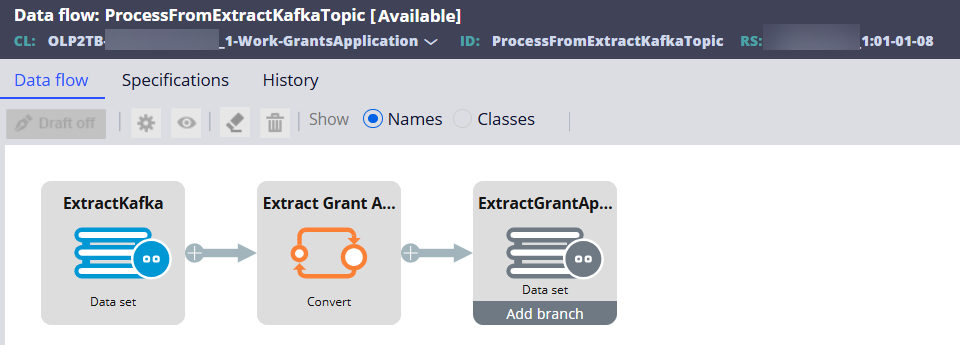

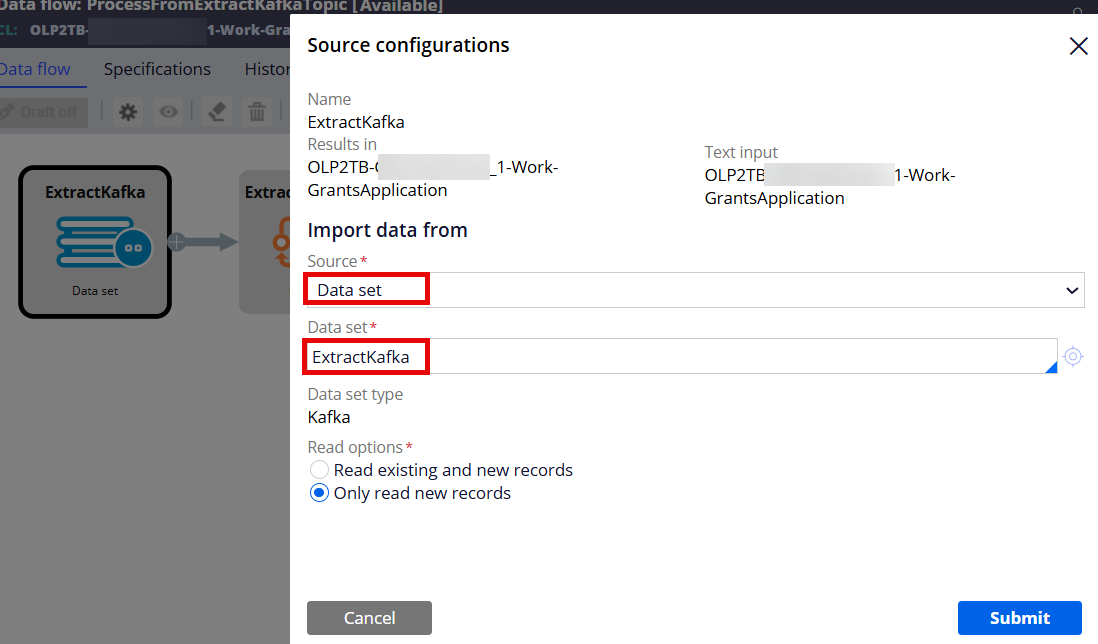

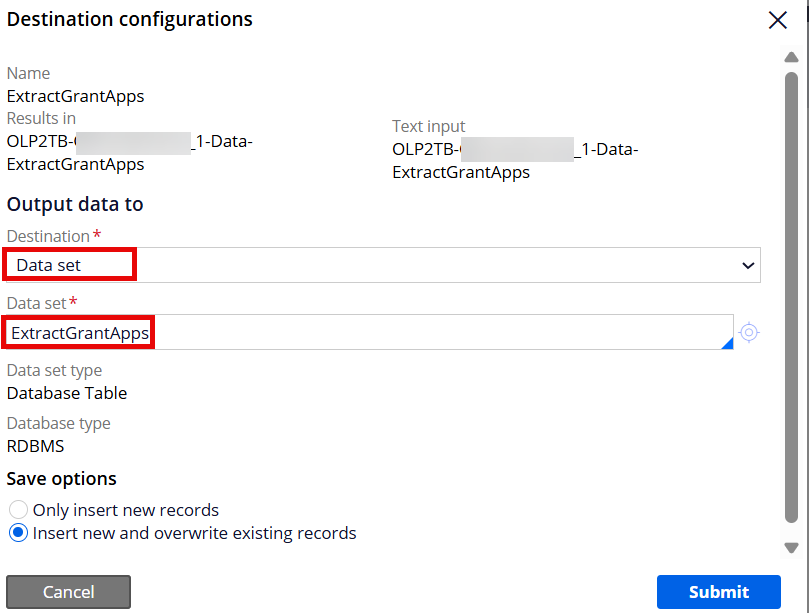

Step 1 - Create a Data Flow rule and set the source & destination.

Source: Data Set/Kafka (created above)

Destination: Data Set/Database Table (created above)

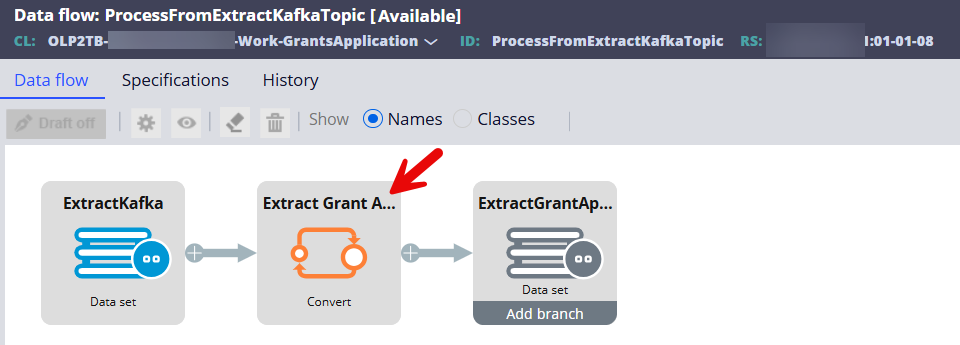

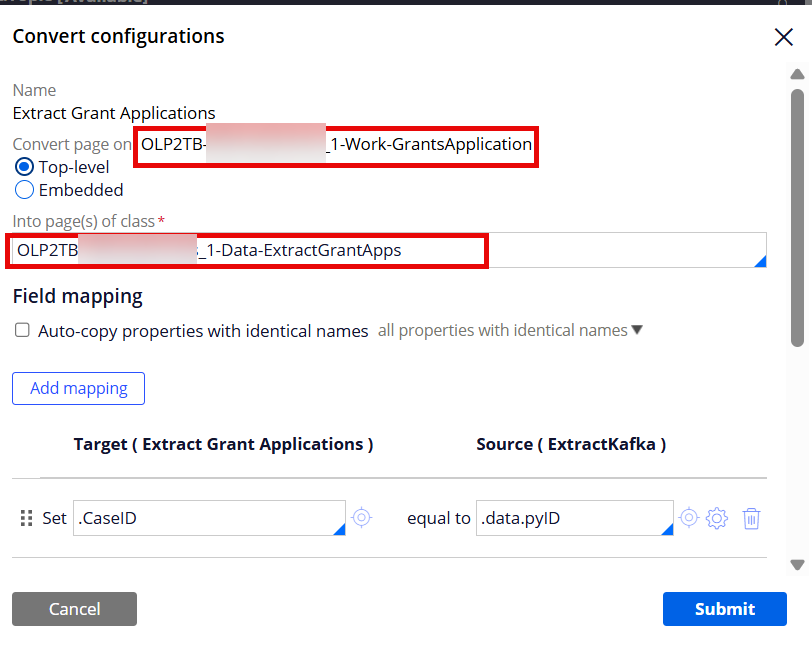

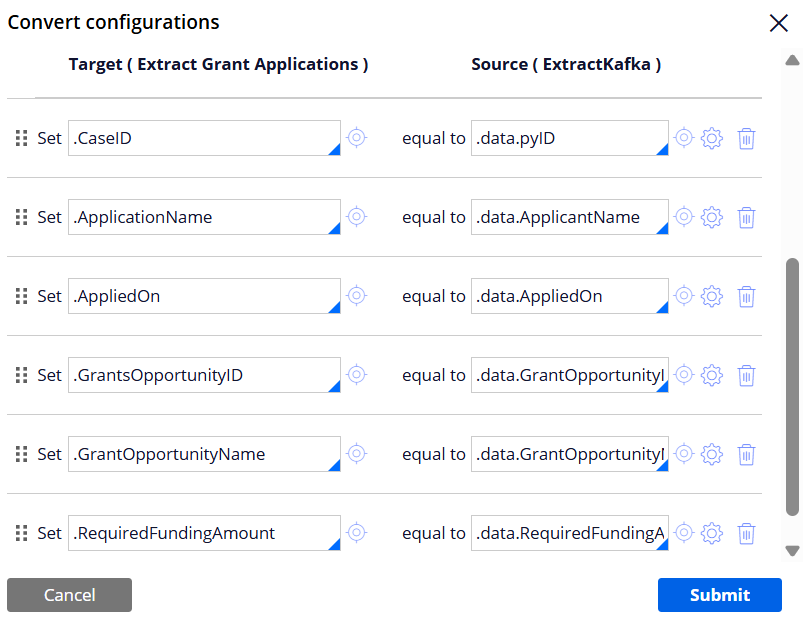

Step 2 - Add a Convert shape to convert and map the work class properties to the data class properties.

Set the field mappings. The source comes from Kafka. Notice that the extracted source properties are placed under the .data Page property. The target properties are defined in the data class.

Note: I manually created the .data Single Page property under the GrantsApplication work class and referenced the work class name in the Page definition field.
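To illustrate what the Convert shape does conceptually, here is a minimal Python sketch, not Pega code. It takes a message shaped like the sample response JSON later in this article, reads the properties under `data`, and copies them to target fields. The source property names come from that sample; the target names in `FIELD_MAP` are hypothetical and should be replaced with the properties of your own data class.

```python
from typing import Any, Dict

# Source property under ".data" -> target property in the data class.
# The target names are hypothetical placeholders for illustration only.
FIELD_MAP: Dict[str, str] = {
    "pyID": "CaseID",
    "ApplicantName": "ApplicantName",
    "GrantOpportunityID": "OpportunityID",
    "GrantOpportunityName": "OpportunityName",
    "RequiredFundingAmount": "FundingAmount",
    "pxUpdateDateTime": "LastUpdated",
}


def convert(event: Dict[str, Any]) -> Dict[str, Any]:
    """Map the properties under 'data' to a flat target record.

    Properties missing from the event are mapped to None.
    """
    data = event.get("data", {})
    return {target: data.get(source) for source, target in FIELD_MAP.items()}


record = convert({"data": {"pyID": "APP-1001", "ApplicantName": "Gilma Shanahan"}})
print(record["CaseID"], record["ApplicantName"])
```

Keeping the mapping in one table mirrors the field-mapping grid of the Convert shape: each row names one source property and one target property.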

## Part E - Run a test.

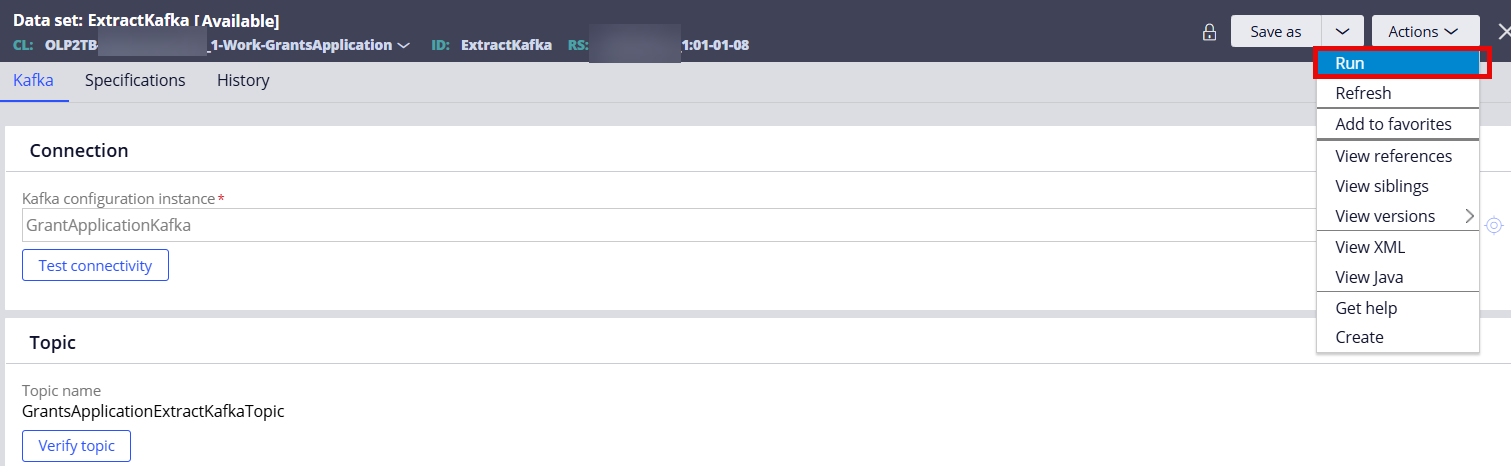

Step 1 - Start the Data Flow (click Actions > Run).

At this point, the Data Flow is running and listening to the Kafka topic in real time.

Step 2 - Create a GrantsApplication case and move it through the different stages of its case life cycle.

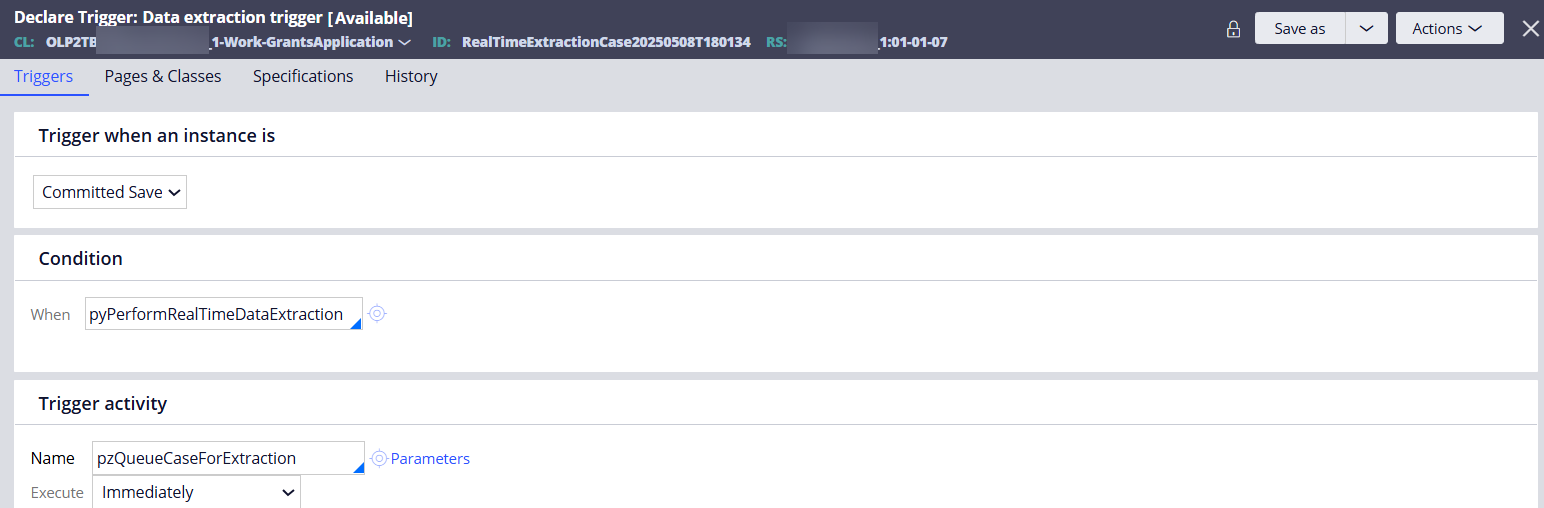

This will save/commit the case to the work table, which will trigger the real-time BIX extraction and publish the case properties to the Kafka topic.

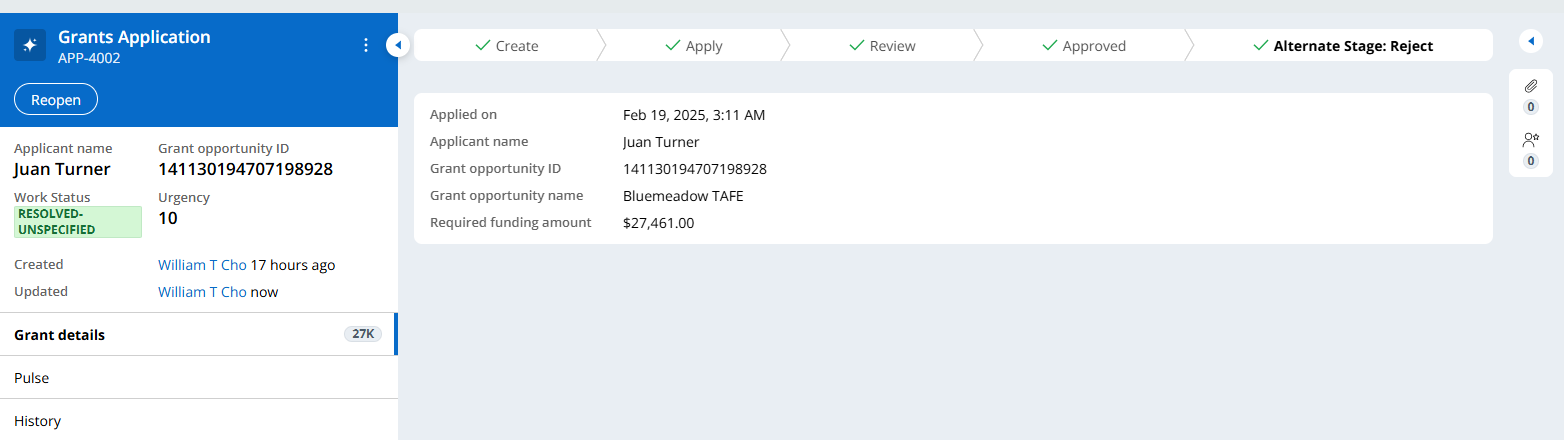

In this example, case APP-4002 has been processed and resolved.

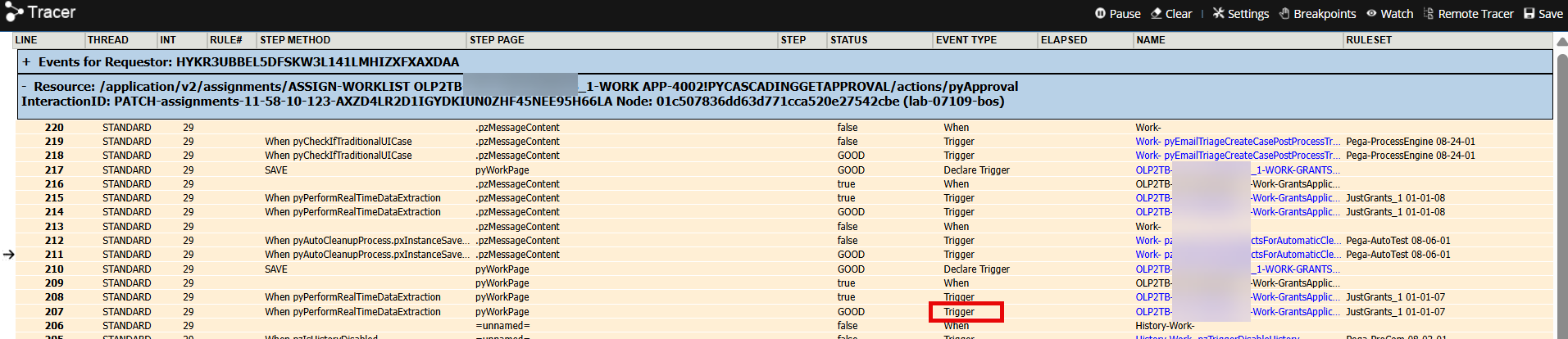

As the case is created and processed, the auto-generated Declare Trigger fires the real-time event.

The following Tracer shows the Declare Trigger execution, which extracts and publishes the case metadata to the Kafka topic.

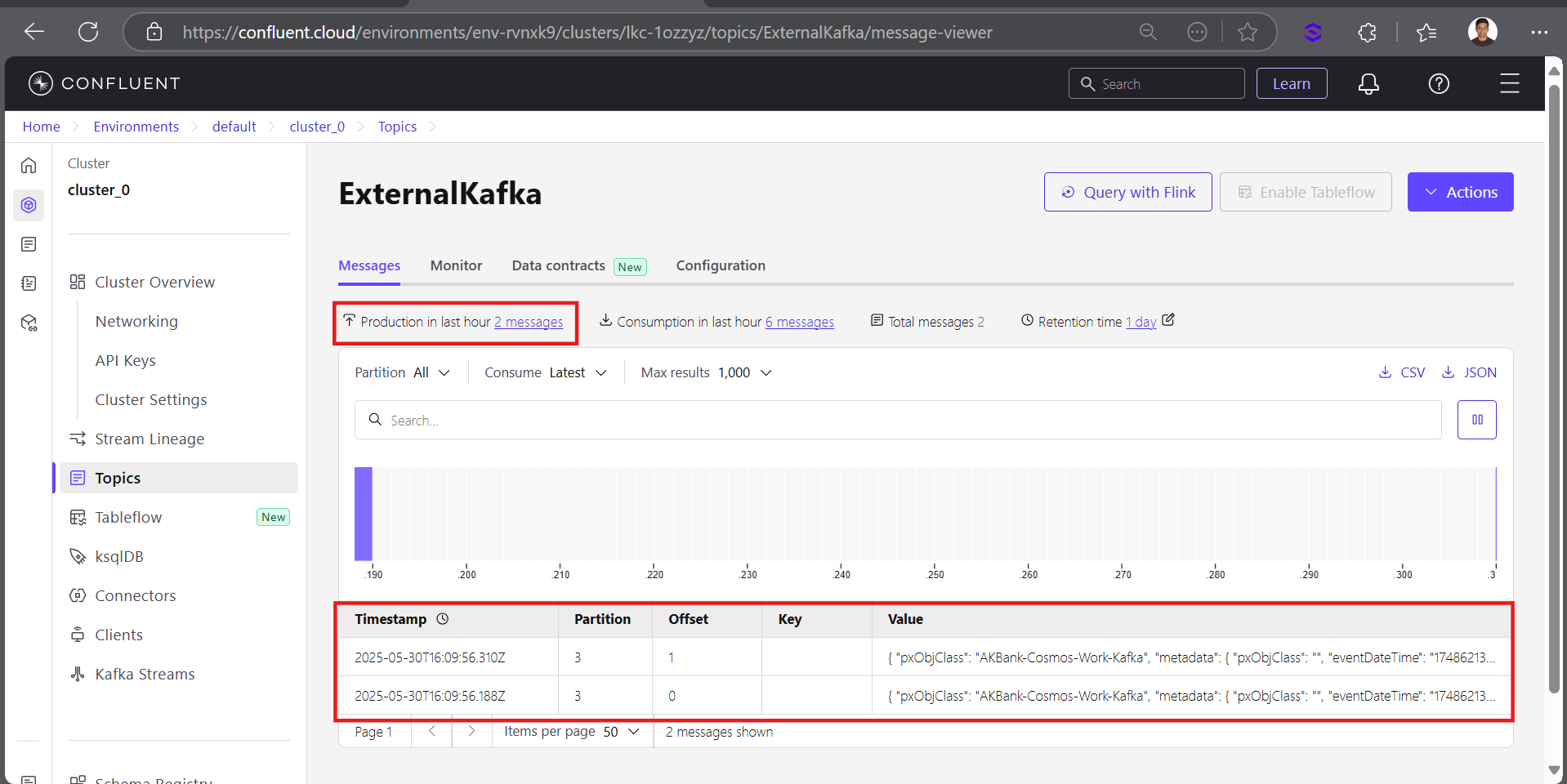

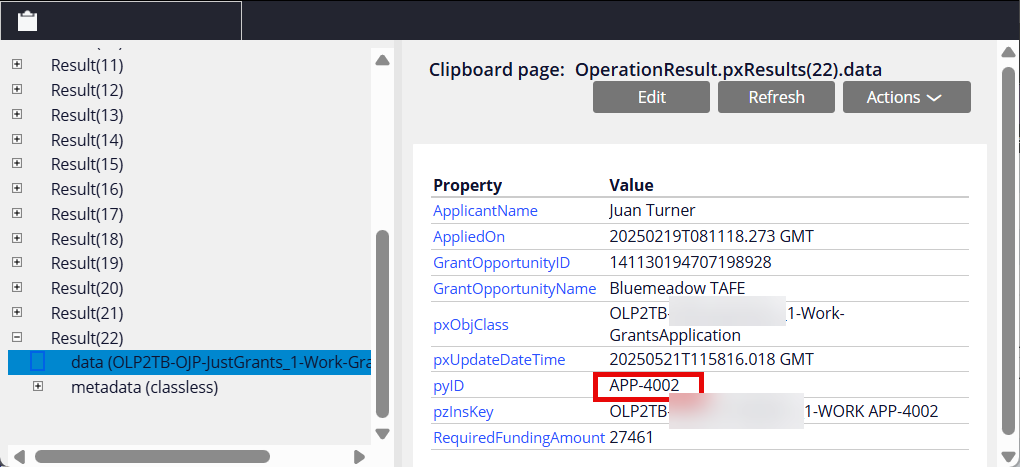

Run the Data Set (Kafka) and select the 'Browse' operation to verify the case in the Kafka topic.

As shown below, APP-4002 case details are published to the Kafka topic.

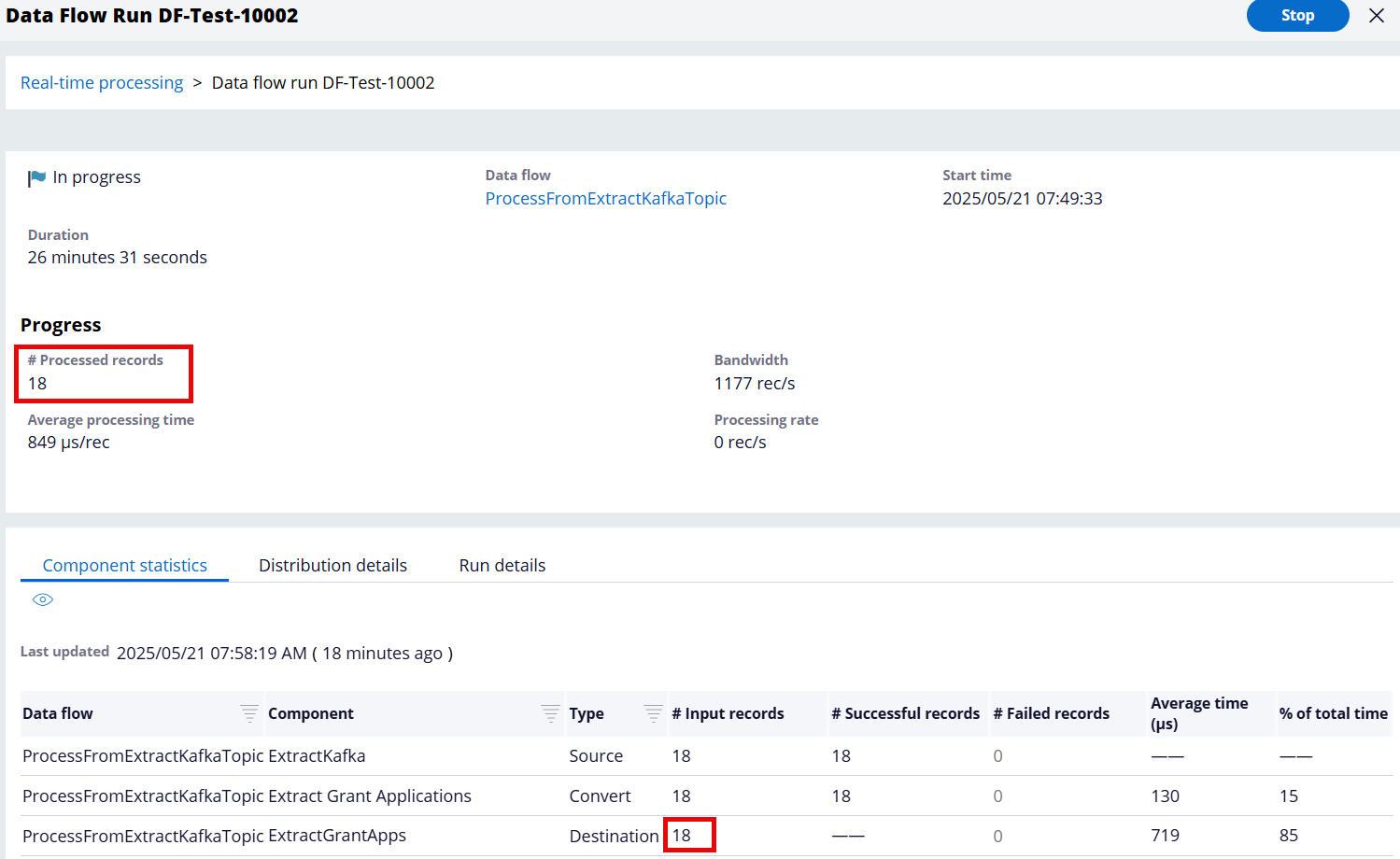

Step 3 - Verify that the Data Flow processed APP-4002 from the Kafka topic and inserted/updated the extracted case details to the database table.

In this example, 18 records were processed and written to the destination (database table). These 18 records were added to the Kafka topic each time the Declare Trigger ran, that is, each time the case was saved/committed to the work table as it moved through its stages.
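The insert/update behavior can be sketched as follows. This is an illustration only: SQLite stands in for the Pega database table, the table and column names are hypothetical, the event values are made-up placeholders, and it assumes the destination is keyed on the case ID so that repeated events for the same case update one row.

```python
import sqlite3


def upsert_events(conn: sqlite3.Connection, events: list) -> None:
    """Apply each extracted event to the table, keyed on the case ID."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS grant_case ("
        "case_id TEXT PRIMARY KEY, applicant_name TEXT, updated_ms INTEGER)"
    )
    for event in events:
        data = event["data"]
        # INSERT OR REPLACE inserts a new row, or replaces the existing row
        # that has the same primary key.
        conn.execute(
            "INSERT OR REPLACE INTO grant_case "
            "(case_id, applicant_name, updated_ms) VALUES (?, ?, ?)",
            (data["pyID"], data.get("ApplicantName"), data.get("pxUpdateDateTime")),
        )
    conn.commit()


# 18 placeholder events for the same case, one per save/commit.
events = [
    {"data": {"pyID": "APP-4002", "ApplicantName": "Example Applicant",
              "pxUpdateDateTime": 1000 + i}}
    for i in range(18)
]
conn = sqlite3.connect(":memory:")
upsert_events(conn, events)
rows = conn.execute("SELECT case_id, updated_ms FROM grant_case").fetchall()
print(rows)
```

Under that keying assumption, all 18 events are processed but the table ends up with a single row holding the latest state of the case.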

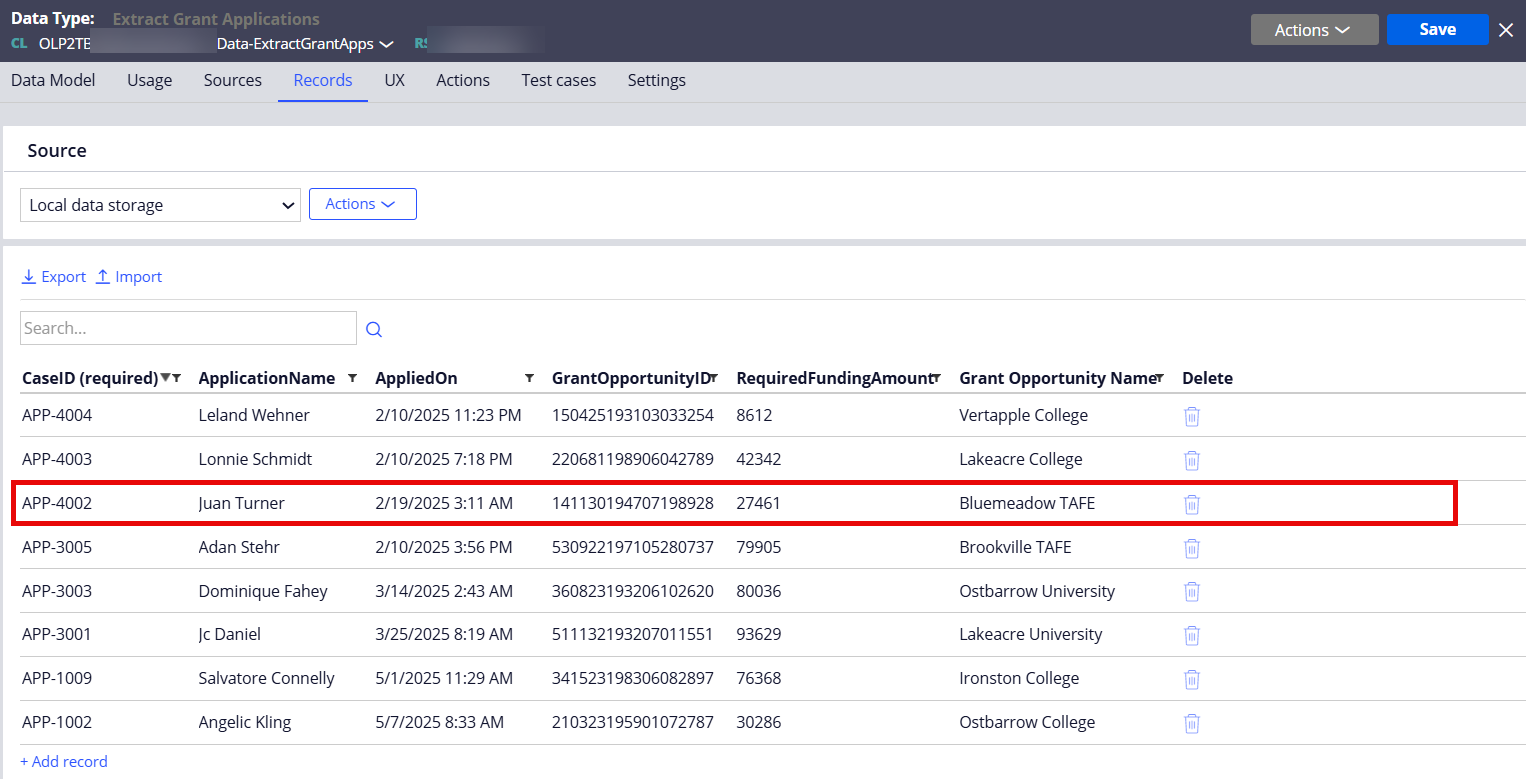

The following data type table (which is the destination of the Data Flow) now contains the APP-4002 case metadata.

Additional information

- The 'EnableDataExtraction' release toggle must be set to ON for real-time extraction to work.

- I found this BIX FAQ useful: https://docs.pega.com/bundle/platform/page/platform/reporting/bix-faq.h…

- I have heard that Data Flow might require a separate license. Please check with your Pega account contact.

- When a case was manually submitted and saved/committed to the database, the real-time BIX extract fired as expected. However, when I created and saved/committed cases from a background process (a batch Data Flow), the real-time BIX extract did NOT fire. I created INC-C21257 to validate this observation, because I would expect real-time extraction to work in both scenarios. Response from Pega Engineering (5/22/25): for Data Flows, Declare Triggers are disabled by default due to performance issues.

- Ensure that your application is added to the System Runtime context. This is required for the standard queue processor activity "pzProcessChangeDataCaptureCaseTypes", which is invoked by the real-time extract Declare Trigger rule.


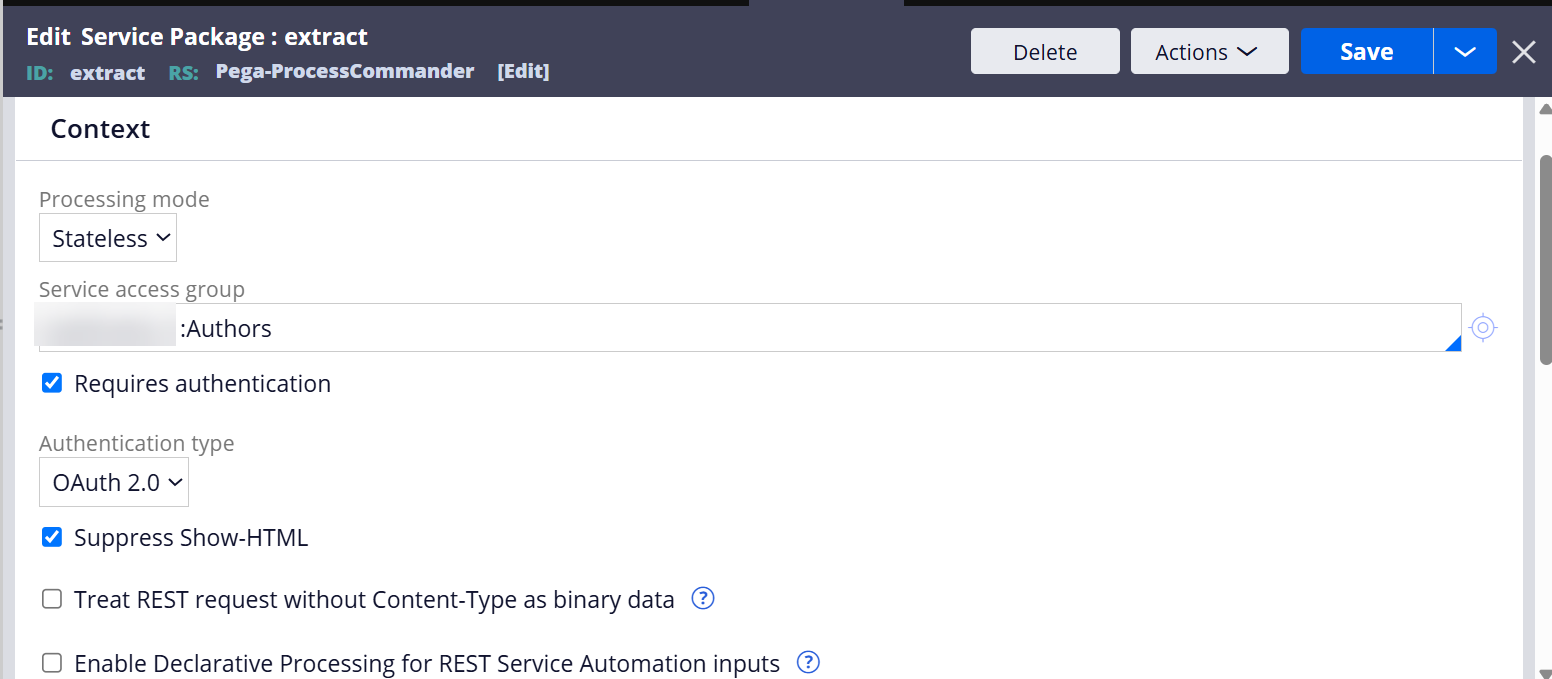

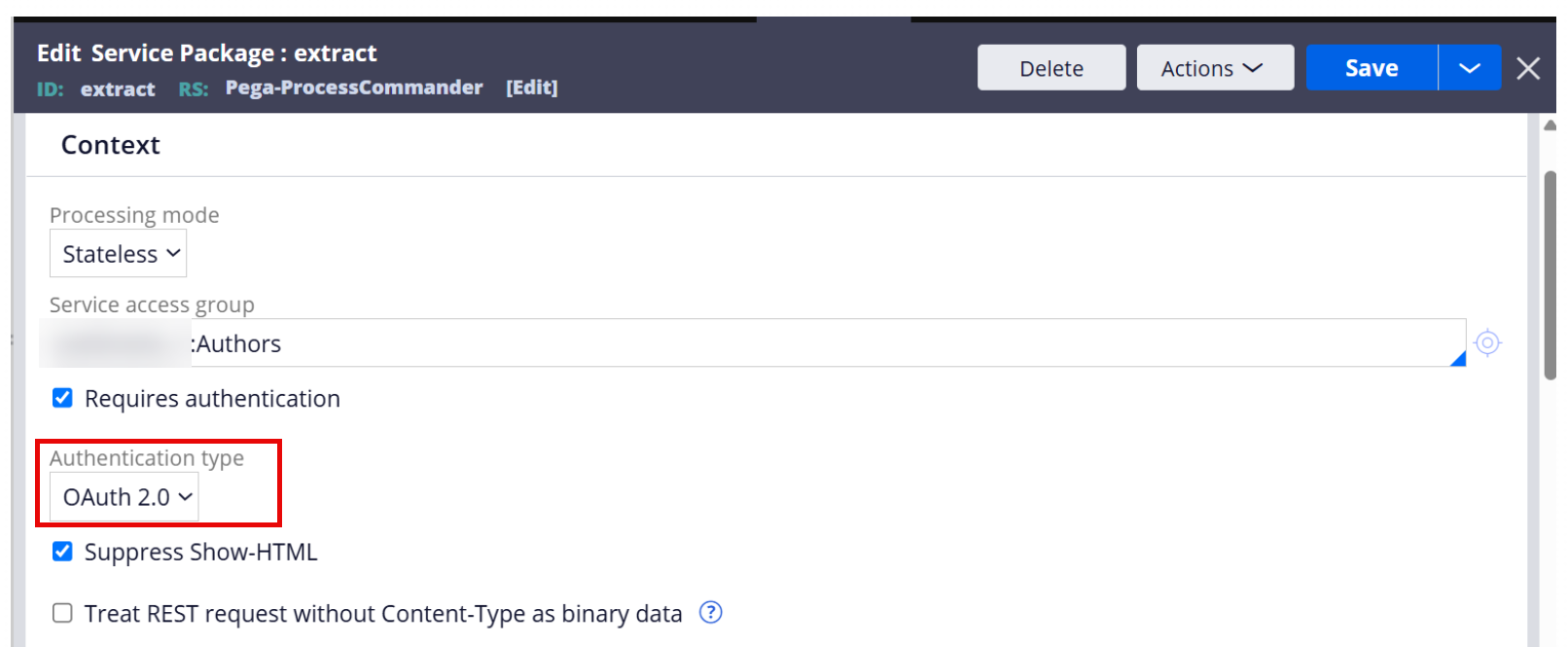

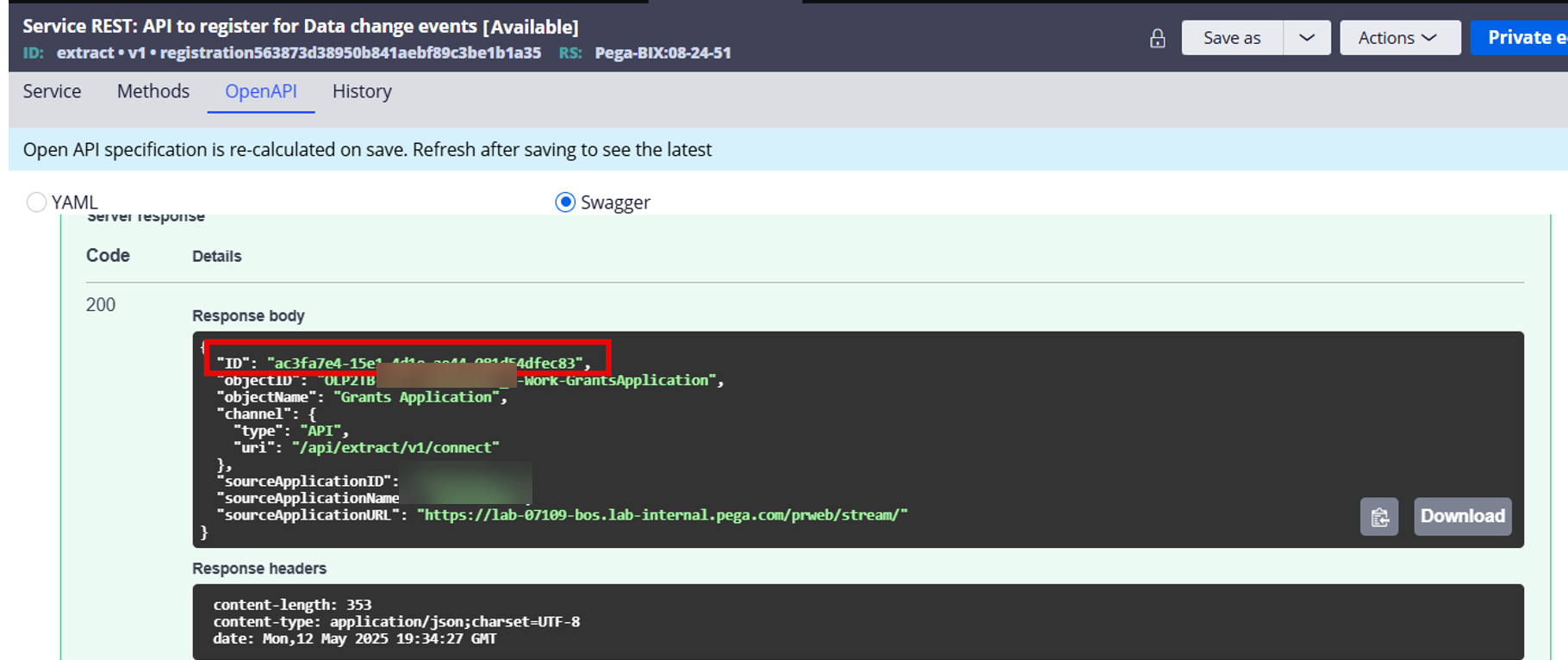

If you want to try the BIX extract APIs, refer to the attached draft document. The product team is currently working on publishing more formal documentation. In addition, the 'OpenAPI' tab of the Service REST rule form shows more API details. Here is an example of api/extract/v1/registration and a sample response. The registration ID is then passed to api/extract/v1/connect/{reg ID} to get the extracted case/data (see the sample response JSON below).
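The two-step flow (register first, then connect with the returned registration ID) can be sketched as a pair of URL helpers. Only the two endpoint paths come from this article. The base URL in the example is hypothetical, and the HTTP method, authentication, and request/response bodies are deliberately left out because they are not described here; check the 'OpenAPI' tab for those details.

```python
from urllib.parse import quote

# Endpoint paths as named in this article.
REGISTRATION_PATH = "api/extract/v1/registration"
CONNECT_PATH = "api/extract/v1/connect"


def registration_url(base_url: str) -> str:
    """URL of the registration endpoint under the given base URL."""
    return f"{base_url.rstrip('/')}/{REGISTRATION_PATH}"


def connect_url(base_url: str, registration_id: str) -> str:
    """URL of the connect endpoint for a previously obtained registration ID."""
    # Percent-encode the ID so it is safe to use as a single path segment.
    return f"{base_url.rstrip('/')}/{CONNECT_PATH}/{quote(registration_id, safe='')}"


# Hypothetical base URL and registration ID, for illustration only.
base = "https://pega.example.com/prweb"
print(registration_url(base))
print(connect_url(base, "my-registration-id"))
```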

- Here is a sample response JSON from the connect API.

      {
        "metadata": {
          "eventDateTime": 1747160313341,
          "eventType": "UPDATE",
          "messageType": "EVENT",
          "objClassName": "OLP2TB-XXX-XXX-Work-GrantsApplication",
          "objType": "CASE",
          "primaryKeys": "OLP2TB-XXX-XXX-WORK APP-1001"
        },
        "data": {
          "pxObjClass": "OLP2TB-XXX-XXX-Work-GrantsApplication",
          "pyID": "APP-1001",
          "GrantOpportunityID": "130534193601314026",
          "ApplicantName": "Gilma Shanahan",
          "GrantOpportunityName": "Brighthurst University",
          "pzInsKey": "OLP2TB-XXX-XXX-WORK APP-1001",
          "AppliedOn": "20250227T222010.478 GMT",
          "RequiredFundingAmount": "36171",
          "pxUpdateDateTime": 1747160313341
        }
      }
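A downstream consumer will likely need to normalize the two timestamp styles in this sample. The sketch below assumes that `eventDateTime` and `pxUpdateDateTime` are milliseconds since the Unix epoch, and that `AppliedOn` is a `yyyyMMddTHHmmss.SSS GMT` string; both are inferred from the sample values rather than from a published schema. The function names are my own.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def epoch_millis_to_utc(millis: int) -> datetime:
    """Convert milliseconds since the Unix epoch to an aware UTC datetime."""
    # Adding a timedelta avoids float rounding of the millisecond part.
    return EPOCH + timedelta(milliseconds=millis)


def parse_gmt_string(value: str) -> datetime:
    """Parse a string such as '20250227T222010.478 GMT' as UTC."""
    parsed = datetime.strptime(value, "%Y%m%dT%H%M%S.%f GMT")
    return parsed.replace(tzinfo=timezone.utc)


event_time = epoch_millis_to_utc(1747160313341)
applied_on = parse_gmt_string("20250227T222010.478 GMT")
print(event_time.isoformat())
print(applied_on.isoformat())
```

Converting both values to timezone-aware datetimes up front makes it straightforward to compare them or load them into a warehouse timestamp column.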

- Currently, there are two recommended approaches: 1) use the provided APIs to read from the internal Kafka, or 2) use an external Kafka.

Please leave any questions or comments.