Discussion

Pegasystems Inc.

NL

Last activity: 4 Dec 2025 10:51 EST

Knowledge Buddy: Mastering the Diagnostic Quality Chain

Great: you’ve configured your first Knowledge Buddy for your customer, and the proof-of-concept was a success. Now you want to bring this functionality to Production so your users can benefit from its power.

But there are concerns about the quality of the answers the Buddy provides, and your team is stuck in a cycle of randomly tweaking settings, hoping for a fix.

That’s not an effective approach.

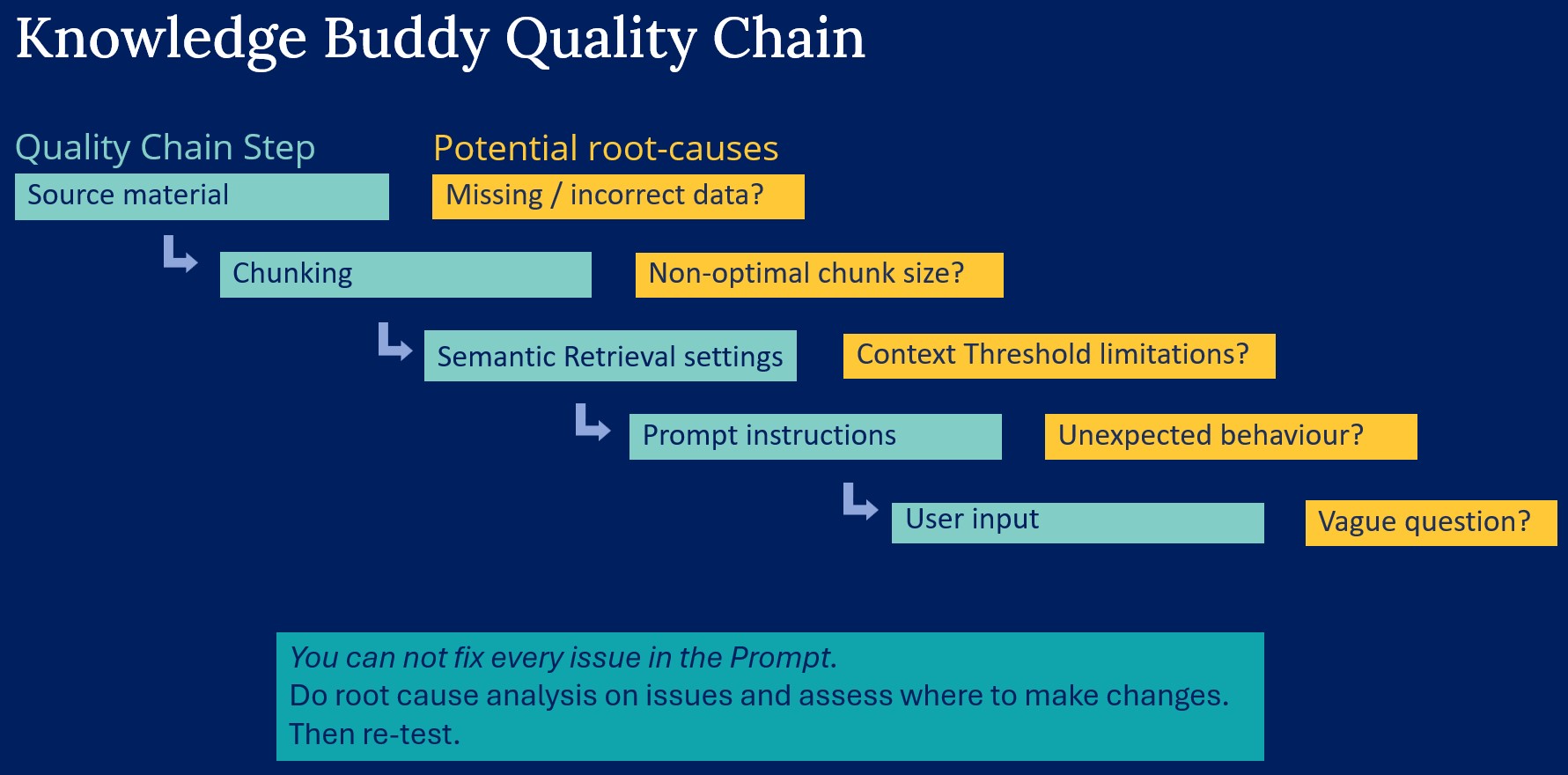

Delivering a trusted solution requires a shift from frantic tuning to systematic diagnosis. The quality of an answer is not determined by a single setting, but by a sequence of factors I call the Quality Chain. A weakness in any one link compromises the entire chain.

To diagnose issues and optimize performance, you must inspect each link.

The Foundation

Before you inspect the links of the quality chain, you must establish your strategic foundation.

Adopt the GenAI Mindset

Optimizing a non-deterministic system can be frustrating for developers accustomed to clear-cut bugs that can be fixed and quickly tested.

You need to think like a scientist who forms a hypothesis, runs an experiment, and analyzes the result. This demands tenacity, but also the pragmatism to recognize when an outcome is good enough to deliver business value.

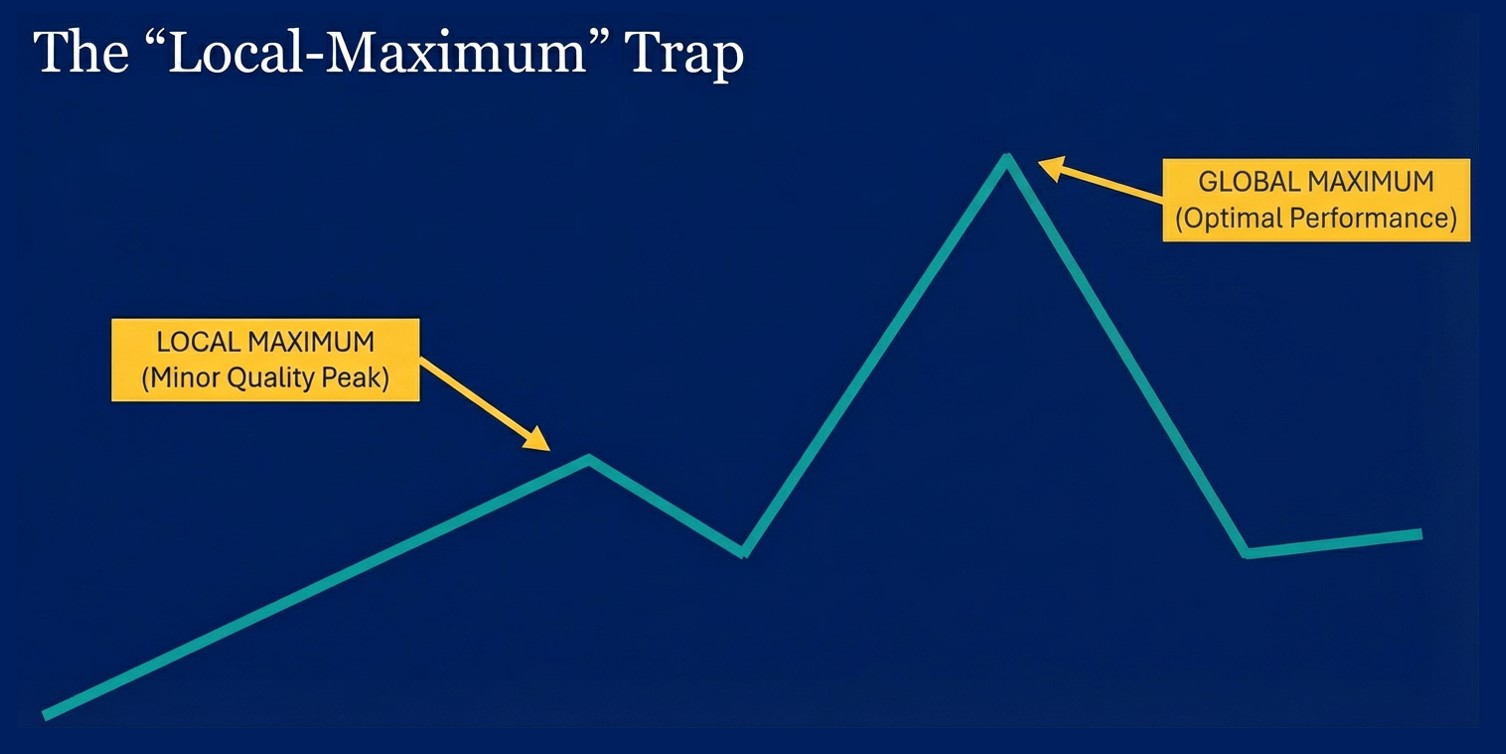

During the tuning process, you must avoid the ‘local-maximum’ trap, where small tweaks lead to a minor quality peak. The best possible outcome may require a completely different set of parameters. Therefore, always start with broad experimentation before homing in on the details.

Define "Good"

Second, you cannot hit a target you haven't defined. Work with business stakeholders to establish exactly what a "good answer" looks like for your specific use case. Should the answer be a brief summary? A list of steps? A direct quote from the source documents? This definition will guide all further tuning.

Establish Ground Truth

Work with business experts to build a curated list of representative questions with ideal answers, your ‘golden set’. This is not optional. This dataset is the single most critical asset for the project. It provides the objective benchmark you will use to validate configuration changes and prove that the system is solid.
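To make the benchmark idea concrete, here is a minimal sketch of scoring a configuration against a golden set. Everything in it is an assumption for illustration: the `golden_set` entries are made up, `fake_buddy` is a stand-in for however you call the Buddy under test (it is not a Pega API), and token-overlap F1 is just one simple scoring choice. Your definition of "good" may call for a different metric or for human review.

```python
from collections import Counter


def token_f1(expected: str, actual: str) -> float:
    """Token-overlap F1 between an ideal answer and a generated answer."""
    exp_tokens = expected.lower().split()
    act_tokens = actual.lower().split()
    if not exp_tokens or not act_tokens:
        return 0.0
    # Multiset intersection counts each shared token at most as often as it appears in both.
    overlap = sum((Counter(exp_tokens) & Counter(act_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(act_tokens)
    recall = overlap / len(exp_tokens)
    return 2 * precision * recall / (precision + recall)


def evaluate(golden_set, ask):
    """Average score of `ask` (question -> answer) over the golden set."""
    scores = [token_f1(item["ideal"], ask(item["question"])) for item in golden_set]
    return sum(scores) / len(scores)


# Hypothetical golden set; real entries come from your business experts.
golden_set = [
    {
        "question": "How do I reset my password?",
        "ideal": "Open Settings and choose Reset password.",
    },
]


# Hypothetical stand-in for a call to the Buddy configuration under test.
def fake_buddy(question: str) -> str:
    return "Open Settings and choose Reset password."


print(evaluate(golden_set, fake_buddy))
```

Running the same `evaluate` call after each configuration change gives you a single number to compare, which keeps the experiment honest even when individual answers vary.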

Diagnosing the Quality Chain

With your strategic foundation in place, you can now systematically inspect each link in the Quality Chain.

Link 1: Source Material

The quality chain begins here. Your Knowledge Buddy can only provide answers based on the material it has access to. If there are knowledge gaps in your source articles, the Buddy cannot generate correct information.

- Work with the content owners to assess the AI readiness of the content. There are many practical checks here, for example looking for information ‘hidden’ in images or in complex table structures.

- Close the feedback loop: set up a governance mechanism to review user feedback that might indicate context gaps, so the knowledge source can be improved.

Link 2: Chunking

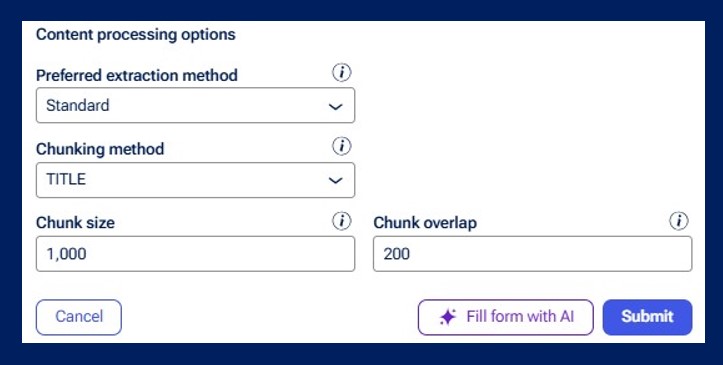

This step determines how your knowledge articles are broken down into chunks for the Buddy, which are stored in the Vector Store. The chunk size and overlap settings are critical for ensuring the right context is found and passed to the LLM.

- If you are connecting with a content store outside of Pega, plan and test the connectivity of this integration early.

- The right chunking strategy depends on your content; there is no one-size-fits-all option. To find the optimal approach, create multiple test datasets with different chunking strategies and compare their performance against a baseline set of questions.
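As an illustration of how chunk size and overlap interact, here is a minimal sketch of fixed-size chunking. It is not how Knowledge Buddy chunks content internally; the function name `chunk_text` and the choice to count words (rather than characters or tokens) are assumptions made to keep the example small.

```python
def chunk_text(text: str, chunk_size: int, overlap: int) -> list[str]:
    """Split text into word-based chunks; consecutive chunks share `overlap` words."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


# Compare two hypothetical strategies on the same (toy) article.
article = "a b c d e f g h i j"
for size, overlap in [(4, 1), (5, 0)]:
    print(size, overlap, chunk_text(article, size, overlap))
```

With overlap, a sentence that straddles a chunk boundary still appears whole in at least one chunk, at the cost of storing some text twice. Which trade-off wins depends on your content, so measure each variant against the same questions.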

Link 3: Semantic Retrieval Settings

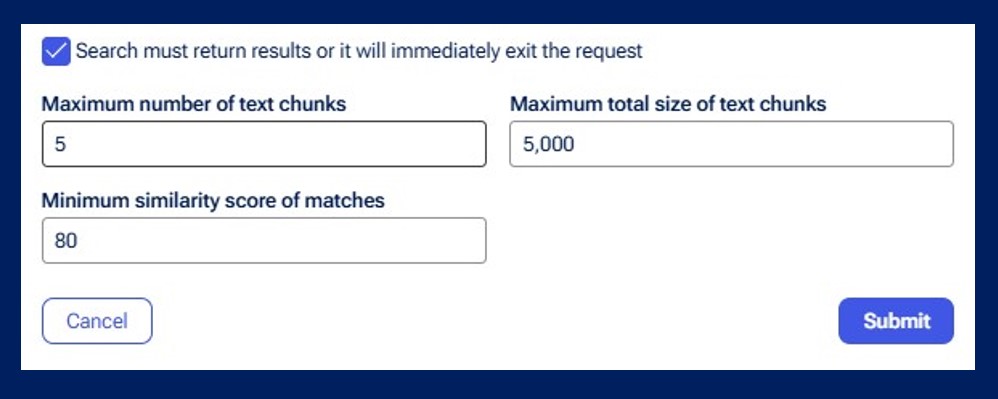

These settings act as the gatekeeper for what information reaches the LLM. Parameters like the similarity score threshold and the number of chunks the Semantic Search retrieves have a significant impact on outcomes. Setting the similarity threshold too high, or the number of chunks too low, risks excluding the correct answer. Setting the threshold too low, or the number of chunks too high, introduces noise that makes it hard for the LLM to find the answer.

- To effectively compare different configurations, use Linked Buddies to try out various threshold settings in parallel. This allows for a side-by-side comparison of how different settings affect answer quality.
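The gatekeeper effect can be sketched in a few lines. This is a toy model under stated assumptions, not the Semantic Search implementation: the two-dimensional embeddings are made up, cosine similarity is assumed as the score, and `retrieve`, `min_score`, and `max_chunks` are hypothetical names.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec, chunks, min_score, max_chunks):
    """Return up to max_chunks (score, text) pairs scoring at least min_score."""
    scored = [(cosine(query_vec, vec), text) for text, vec in chunks]
    kept = [pair for pair in scored if pair[0] >= min_score]
    kept.sort(key=lambda pair: pair[0], reverse=True)  # best match first
    return kept[:max_chunks]


# Toy embeddings, invented for illustration only.
chunks = [
    ("reset password steps", [1.0, 0.0]),
    ("password policy", [0.8, 0.6]),
    ("holiday schedule", [0.0, 1.0]),
]
query = [1.0, 0.0]

strict = retrieve(query, chunks, min_score=0.9, max_chunks=3)
loose = retrieve(query, chunks, min_score=0.0, max_chunks=3)
print([text for _, text in strict])
print([text for _, text in loose])
```

The strict setting keeps only the closest chunk and would drop "password policy" even if the answer lived there; the loose setting also passes the unrelated "holiday schedule" chunk to the LLM as noise.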

Link 4: Prompt Instructions

The wording of your instructions can be the difference between great and mediocre answers. However, instruction tuning is most effective when the preceding links are strong.

- Overly long and complex prompts can cause the model to ignore certain constraints. Assess what the minimum set of instructions is to reach your desired outcome.

- LLMs are designed to be helpful, which means they will try to answer a question even with poor context. We typically want our Buddies to ONLY provide answers that are sourced from the context we provide. It is critical that you do not leave ‘gaps’ in your prompt instructions that give the LLM a reason to go outside the context provided.

- Teams often look at prompt instructions first when something goes wrong. They have a big impact, but remember that not everything can be fixed with prompting.
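As a rough illustration of "minimal instructions with no gaps", here is a sketch of a prompt assembled from a question and retrieved chunks. The wording, the `FALLBACK` text, and the `build_prompt` helper are all assumptions for this example; they are not taken from Knowledge Buddy, and your own instructions should be validated against your golden set.

```python
# Hypothetical fallback reply for questions the context cannot answer.
FALLBACK = "I could not find this in the available knowledge articles."


def build_prompt(question: str, context_chunks: list[str]) -> str:
    """Assemble a short, context-only instruction block for the LLM."""
    context = "\n\n".join(
        f"[{i}] {chunk}" for i, chunk in enumerate(context_chunks, start=1)
    )
    return (
        "Answer the question using ONLY the numbered context below.\n"
        f'If the context does not contain the answer, reply exactly: "{FALLBACK}"\n'
        "Do not use outside knowledge.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


print(build_prompt("How do I reset my password?", ["Open Settings and choose Reset password."]))
```

The explicit fallback matters: it gives the model a sanctioned way to decline, so being "helpful" no longer requires going outside the provided context.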

Link 5: User Input

The user is the final link in the chain. Even with a perfectly tuned Buddy, unclear questions will result in poor outcomes.

- Guide your users on how to ask effective questions. A useful analogy: Ask the question as if you were asking a colleague who has no context for what you are working on.

- Build user confidence with a phased UAT and Pilot plan. Start with a small group of end-user representatives who know they will see preliminary results. Use their feedback and question patterns to improve the Buddy and your user guidance. Pilot users can then also act as ambassadors for new users.

From a Reactive to an Intentional Quality Approach

Moving a Knowledge Buddy from a PoC to a trusted Production solution requires deliberate attention to quality. It means evolving from a reactive tuner who randomly adjusts prompts to a strategic architect who diagnoses issues methodically across the entire Quality Chain.

By building on a strong foundation and analyzing each link in sequence, you replace guesswork with a repeatable, evidence-based process. This is how you deliver GenAI solutions that users can trust.