Archiving cases and their associated artifacts

This article walks through the step-by-step configuration for archiving old, resolved cases and their associated artifacts (such as child cases, work history, and attachments) in Pega.

Client use case

The client has been running a Pega application for many years, and the volume of resolved cases and associated data (such as history and attachments) has grown very large. This increases database usage and adds overhead to database performance. The client's requirement is to archive these cases to a secondary storage repository, where they can still be searched and viewed, and to purge them from the primary database.

Configuration steps

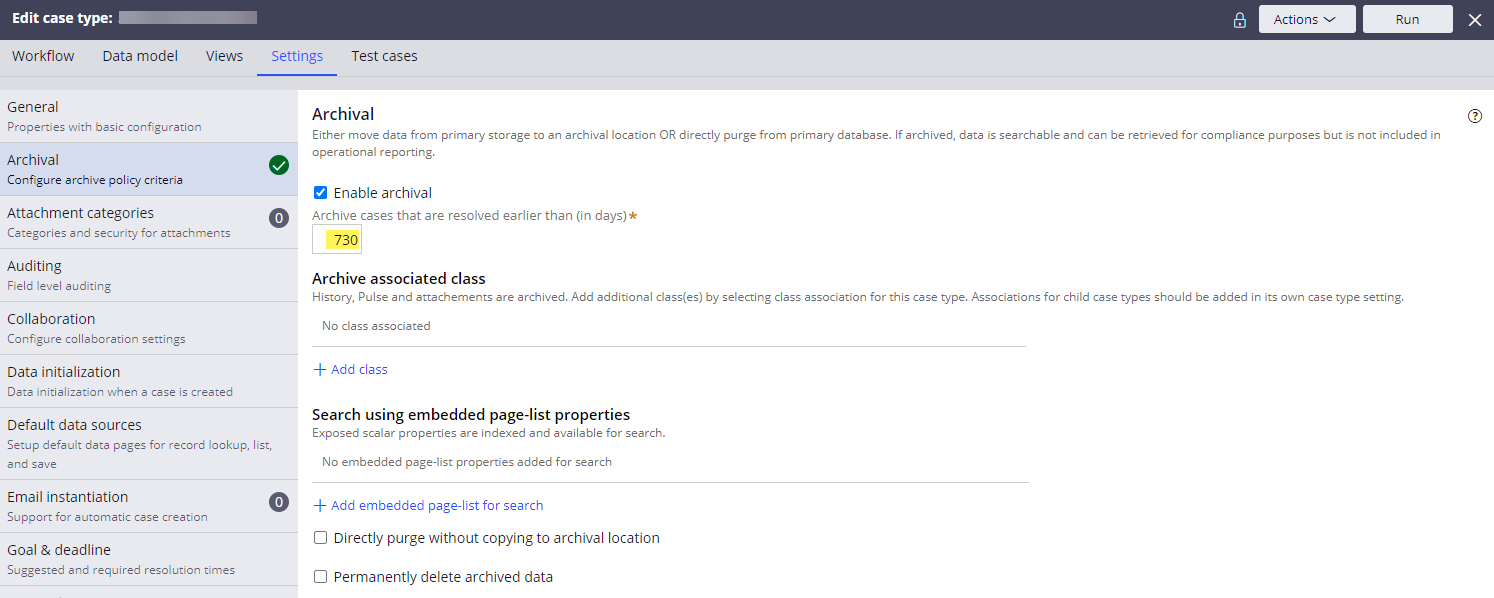

Step 1 - Configure the archival policy for a case type.

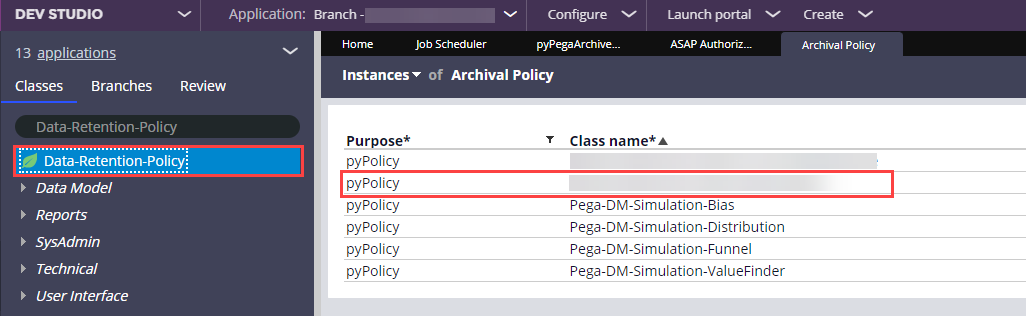

Saving the policy creates a new data instance of the Data-Retention-Policy class.

Any later updates to the case archiving policy can be made directly on the Data-Retention-Policy instance; the change is automatically reflected in the case type editor.

To set the archiving policy in higher environments, we packaged the Data-Retention-Policy instance in DEV and migrated it. After that, we can update the instance directly as needed in each environment (that is, we can configure a different archiving policy per environment).

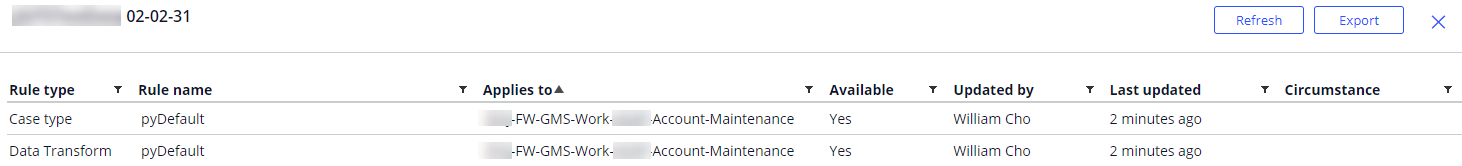

Note: we had to temporarily create an unlocked ruleset version to save the new archival policy configuration from the 'Edit case type' page. On save, the platform auto-generated the following two rules: the pyDefault case type and the pyDefault data transform. Once the new Data-Retention-Policy instance was created, we deleted the two generated rules and the temporary ruleset version, and migrated only the Data-Retention-Policy instance to higher environments.
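Conceptually, an archival policy ties eligibility to how long ago a case was resolved. The Java sketch below models that rule for reasoning and testing purposes only; it is not Pega's implementation. It assumes the policy is expressed as a number of days after resolution and that a case without a resolved timestamp is never eligible. ArchivalEligibility and isDue are hypothetical names.

```java
import java.time.Duration;
import java.time.Instant;

// Hypothetical helper: models an "archive N days after resolution" rule.
// ASSUMPTION: the policy is a day count measured from the resolved timestamp.
// This is an illustration only, not Pega's internal logic.
final class ArchivalEligibility {

    private ArchivalEligibility() {}

    /**
     * Returns true when a case resolved at resolvedAt has been resolved for at
     * least daysAfterResolution days as of now. A null resolvedAt (no
     * resolved timestamp on the case) is treated as not eligible.
     */
    static boolean isDue(Instant resolvedAt, int daysAfterResolution, Instant now) {
        if (resolvedAt == null) {
            return false;
        }
        if (daysAfterResolution < 0) {
            throw new IllegalArgumentException("daysAfterResolution must be >= 0");
        }
        Instant dueAt = resolvedAt.plus(Duration.ofDays(daysAfterResolution));
        return !now.isBefore(dueAt);
    }

    public static void main(String[] args) {
        Instant now = Instant.parse("2021-06-03T00:00:00Z");
        Instant resolved = Instant.parse("2021-01-01T00:00:00Z"); // 153 days before now
        System.out.println(isDue(resolved, 90, now));  // true
        System.out.println(isDue(resolved, 365, now)); // false
        System.out.println(isDue(null, 0, now));       // false
    }
}
```

Under these assumptions, a case resolved on 2021-01-01 would be due for archival by 2021-06-03 under a 90-day policy but not under a 365-day policy.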

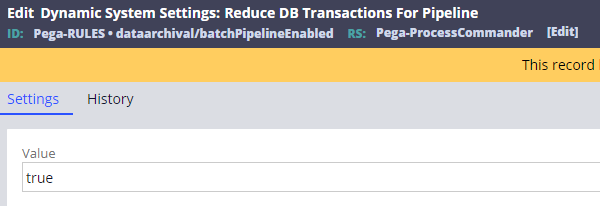

Step 2 - Set the dataarchival/batchPipelineEnabled dynamic system setting (DSS) to 'true'.

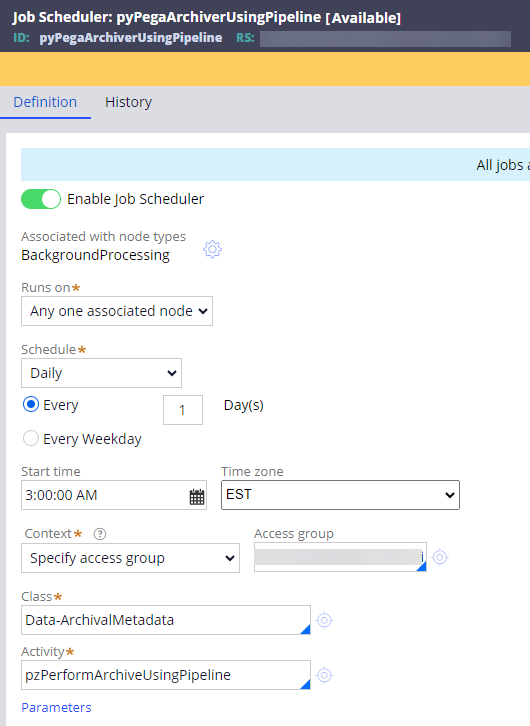

Step 3 - Enable the pyPegaArchiverUsingPipeline job scheduler and set the schedule.

- Save As the OOTB job scheduler into your application ruleset, then enable it and modify the schedule there.

Validations

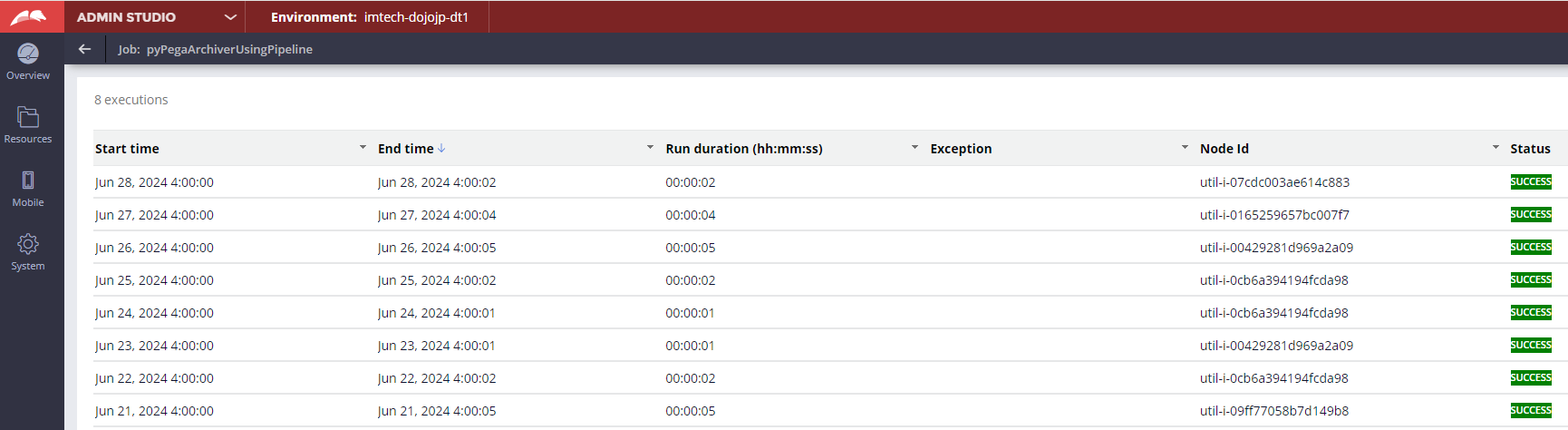

Validate 1 - Go to Admin Studio to verify that the job scheduler ran successfully.

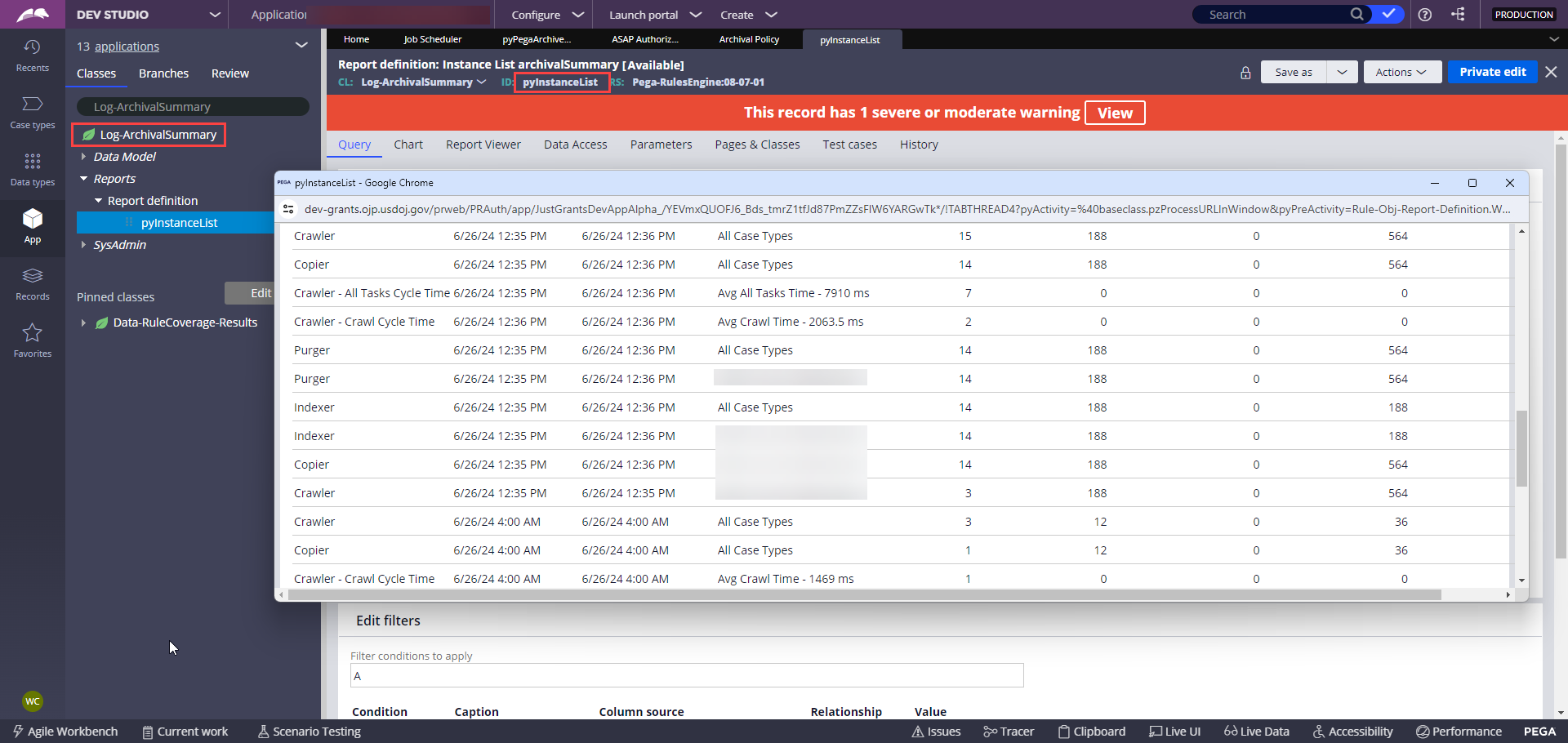

Validate 2 - Go to the Log-ArchivalSummary class and run the pyInstanceList report definition to review the archival summary records.

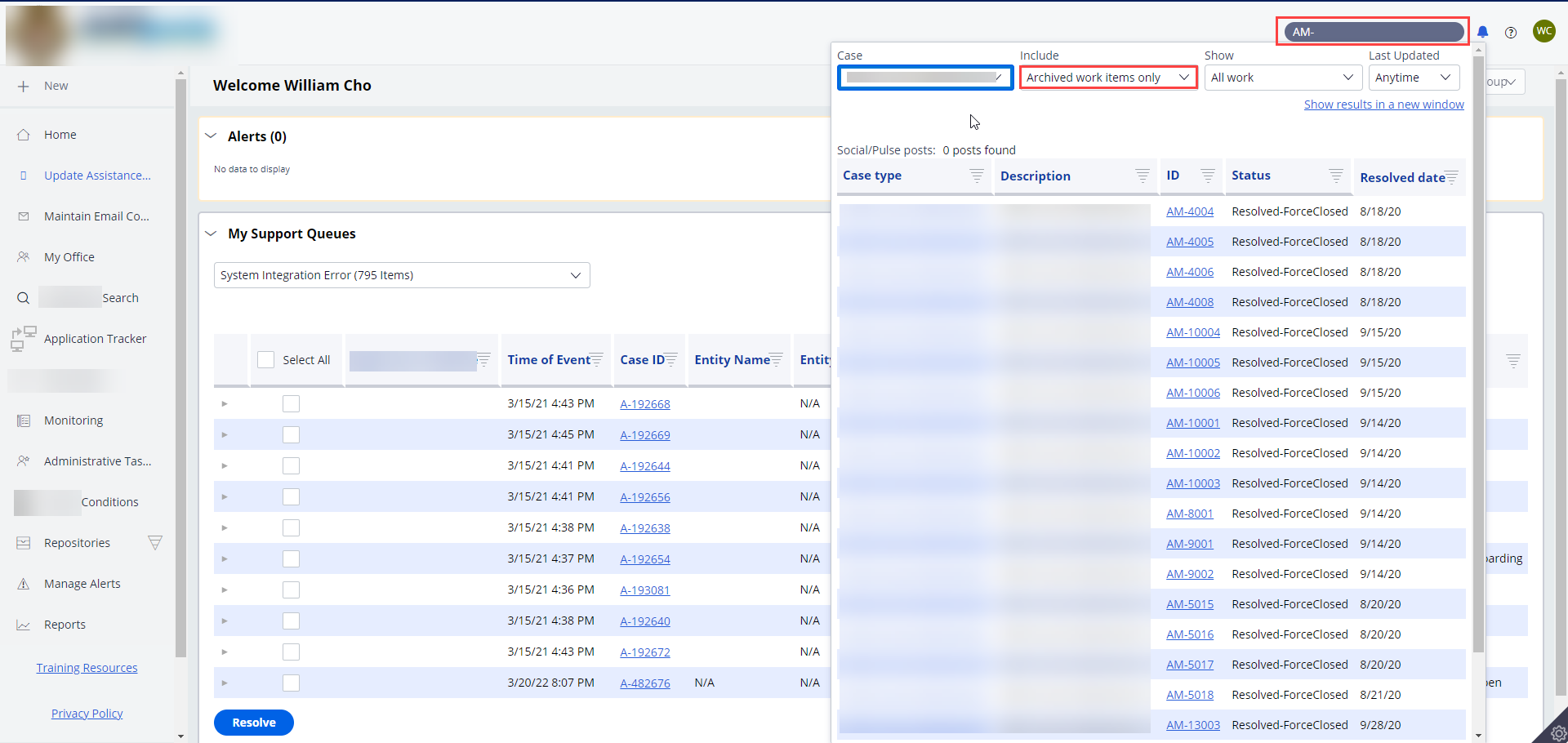

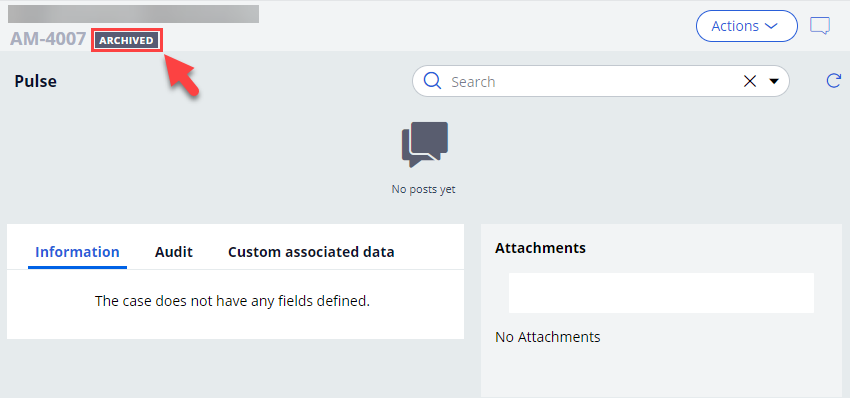

Validate 3 - Search the archived cases using the OOTB case search.

In the Include drop-down, select 'Archived work items only'.

Open an archived case and verify that it shows the ARCHIVED status.

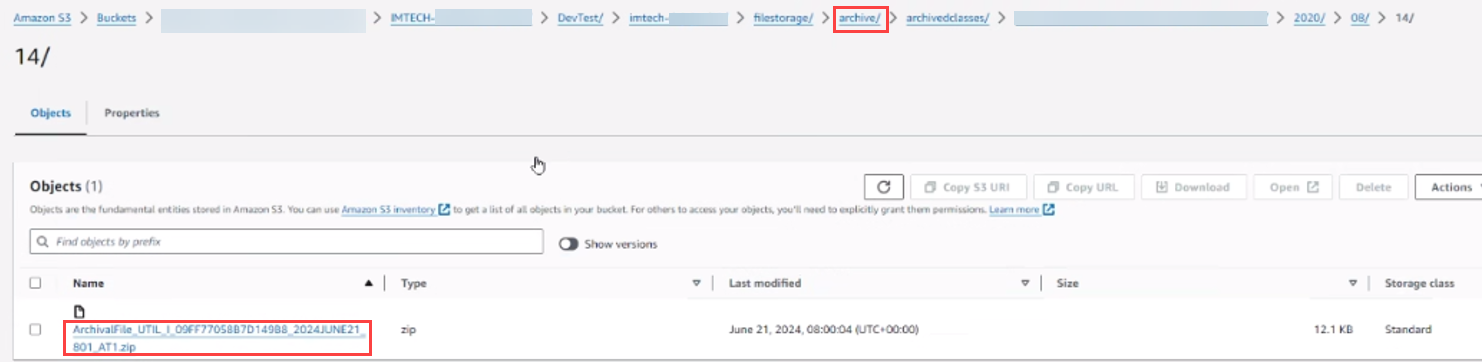

Validate 4 - Ask the Pega Cloud administrator to verify that an archive sub-folder has been created in Pega Cloud File Storage and that it contains the archived zip file.

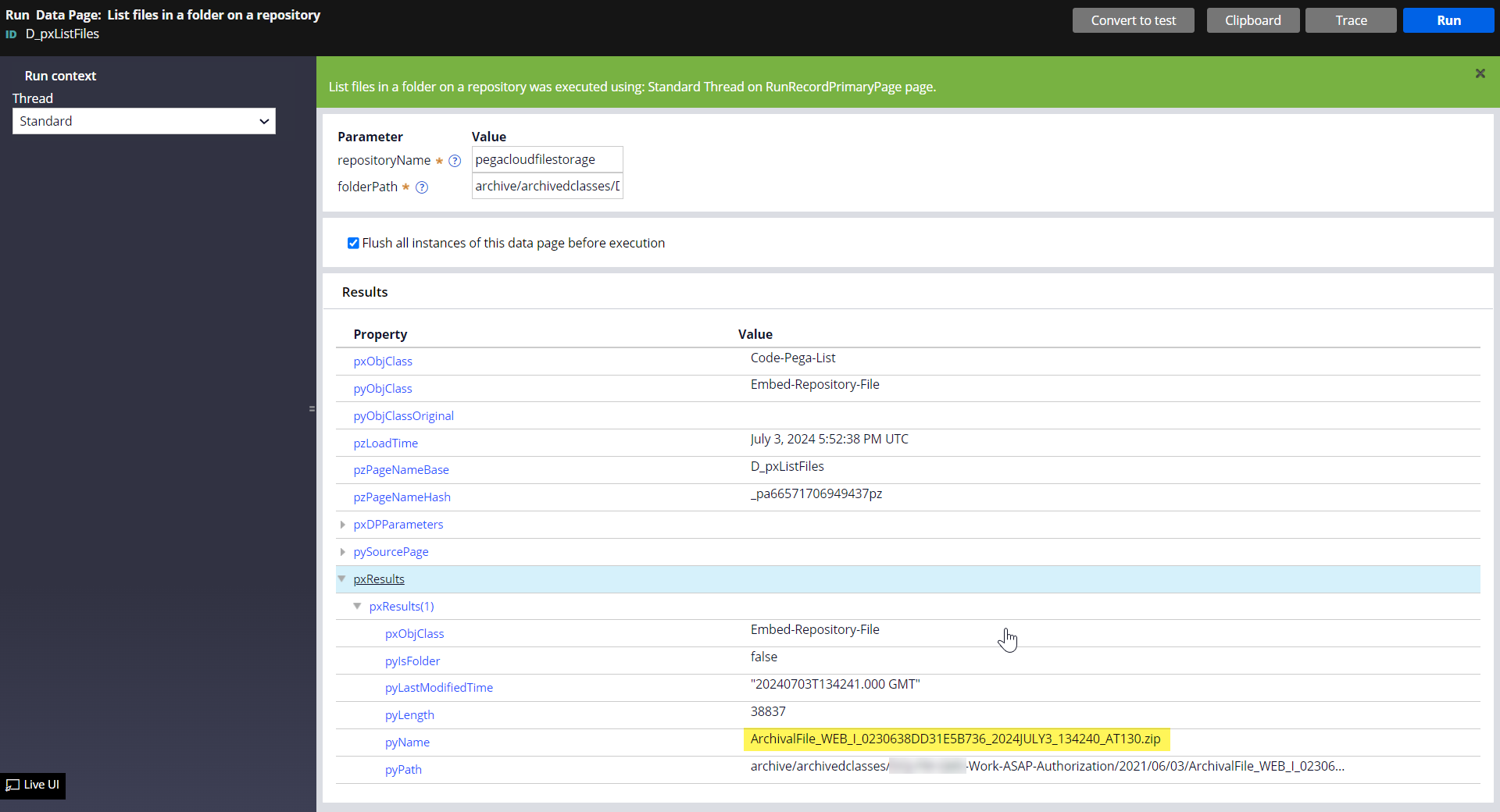

Alternatively, run the D_pxListFiles data page to verify that the archived zip file was created in the file storage.

Example parameters:

- repositoryName="pegacloudfilestorage"

- folderPath="archive/archivedclasses/XXXXX-Work-ASAP-Authorization/2021/06/03/"
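The example folderPath appears to follow an archive/archivedclasses/&lt;work class&gt;/&lt;yyyy&gt;/&lt;MM&gt;/&lt;dd&gt;/ layout. Assuming that layout holds for other classes and dates (it is inferred from this single example, and which date the folder represents should be confirmed in your own repository), a small Java helper can build the folderPath value to pass to D_pxListFiles. ArchivePaths and folderPathFor are hypothetical names.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;

// Hypothetical helper: builds a folderPath value for D_pxListFiles.
// ASSUMPTION: archived files live under
// archive/archivedclasses/<work class>/<yyyy>/<MM>/<dd>/, as in the example above.
final class ArchivePaths {

    private static final DateTimeFormatter DATE_PATH =
            DateTimeFormatter.ofPattern("yyyy/MM/dd");

    private ArchivePaths() {}

    static String folderPathFor(String workClass, LocalDate folderDate) {
        if (workClass == null || workClass.trim().isEmpty()) {
            throw new IllegalArgumentException("workClass is required");
        }
        return "archive/archivedclasses/" + workClass + "/" + folderDate.format(DATE_PATH) + "/";
    }

    public static void main(String[] args) {
        String path = folderPathFor("XXXXX-Work-ASAP-Authorization", LocalDate.of(2021, 6, 3));
        System.out.println("repositoryName=\"pegacloudfilestorage\"");
        System.out.println("folderPath=\"" + path + "\"");
    }
}
```

Running main reproduces the example parameters above, which is a quick way to check the assumed layout before looking up a different class or date.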

Archiving cases with custom resolution status

By default, Pega archives only resolved cases with a 'Resolved-*' status. However, a client may have resolved cases with a custom resolution status that does not start with 'Resolved-'. This OOTB behavior can be customized to archive cases with other resolution statuses.

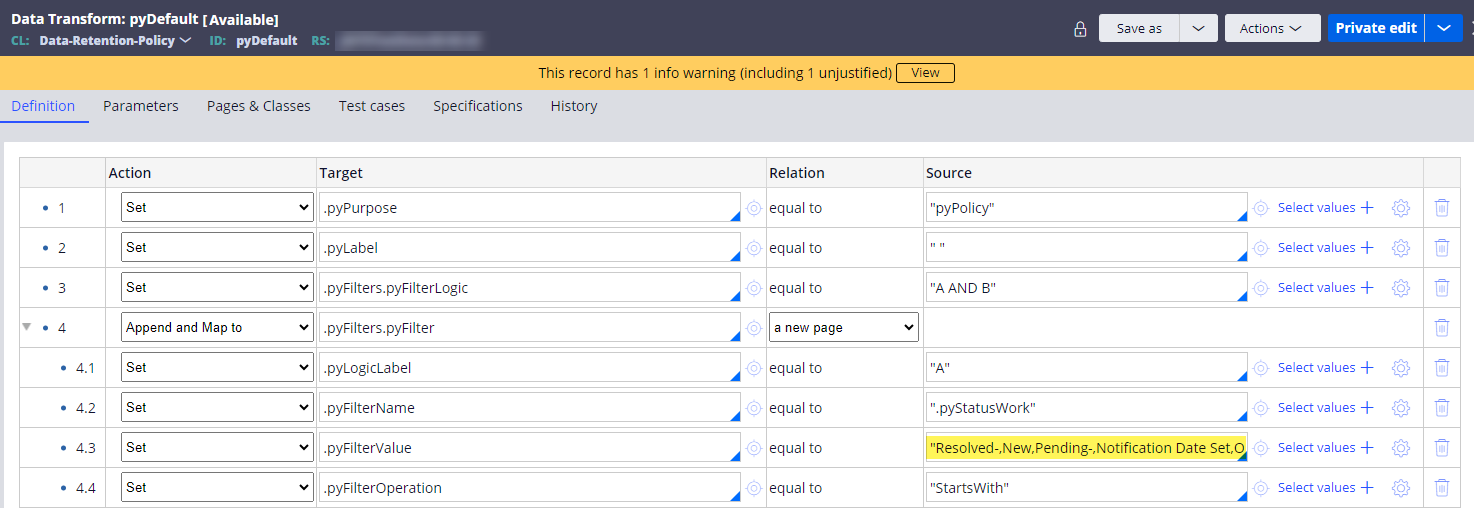

To do this, save the OOTB pyDefault data transform into your application ruleset and update the value of the .pyFilterValue property.

In our example, we set it to:

.pyFilterValue = "Resolved-,New,Pending-,Notification Date Set,Open"

Caveat: to change the resolution status for a case type that already has an archival policy, we have to delete the existing Data-Retention-Policy records for that case type (after customizing the pyDefault data transform above) and then configure the archival policy again.

Note: make sure that cases with a custom resolution status still have a valid .pyResolvedTimestamp value.

Read more on configuring a custom resolution status.
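To reason about which statuses a filter value like the one above would cover, here is a hypothetical Java model. It assumes, without confirmation from Pega documentation, that each comma-separated entry in .pyFilterValue is matched as a prefix of pyStatusWork (so 'Resolved-' covers 'Resolved-Completed', mirroring the default 'Resolved-*' behavior), and, following the note on .pyResolvedTimestamp, that a case without a resolved timestamp is not a candidate. StatusFilter and its methods are illustrative names, not Pega APIs.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of a comma-separated status filter such as
// "Resolved-,New,Pending-,Notification Date Set,Open".
// ASSUMPTION: every entry is a prefix match on pyStatusWork; Pega's actual
// matching logic may differ.
final class StatusFilter {

    private final List<String> prefixes = new ArrayList<>();

    StatusFilter(String filterValue) {
        for (String entry : filterValue.split(",")) {
            String trimmed = entry.trim();
            if (!trimmed.isEmpty()) {
                prefixes.add(trimmed);
            }
        }
    }

    /** True if the status starts with any configured entry. */
    boolean matchesStatus(String pyStatusWork) {
        if (pyStatusWork == null) {
            return false;
        }
        for (String prefix : prefixes) {
            if (pyStatusWork.startsWith(prefix)) {
                return true;
            }
        }
        return false;
    }

    /** A candidate needs a matching status and a non-empty resolved timestamp. */
    boolean isCandidate(String pyStatusWork, String pyResolvedTimestamp) {
        return matchesStatus(pyStatusWork)
                && pyResolvedTimestamp != null
                && !pyResolvedTimestamp.trim().isEmpty();
    }

    public static void main(String[] args) {
        StatusFilter filter = new StatusFilter("Resolved-,New,Pending-,Notification Date Set,Open");
        System.out.println(filter.matchesStatus("Resolved-Completed")); // true
        System.out.println(filter.matchesStatus("Pending-Approval"));   // true
        System.out.println(filter.matchesStatus("Closed"));             // false
        System.out.println(filter.isCandidate("Open", null));           // false
    }
}
```

Under the prefix assumption, an entry such as 'New' would also match any status that merely begins with 'New', so check how your Pega version actually applies the filter before relying on it.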

Additional notes

- The archival process also archives certain artifacts associated with a case, such as child cases, work history, attachments, Pulse replies (including link attachments), and declarative indexes. Refer to this Pega documentation article for more information.

- One challenge of archiving old cases in lower environments (such as DEV, QA, and Stage) is that many old cases are left unresolved and are therefore not eligible for archival; a case must be resolved before it can be archived. To handle this, we wrote an activity that calls the pxForceCaseClose activity API to bulk-close all unresolved cases older than a given timestamp (passed as a parameter), setting pyStatusWork to Resolved-ForceClosed. The next archival job run then archives them.

- When bulk-closing a very large number of cases, consider running the pxForceCaseClose activity through Queue-For-Processing on background processing nodes (instead of the web nodes that serve front-end users). This distributes the processing load across multiple nodes, which increases throughput and keeps the user's requestor from being locked while the cases are closed.

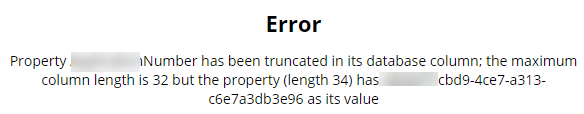

- When force-closing an old case, the case may fail to resolve due to an error. One possible reason is that the old case does not process correctly against the latest rules. In our project, one case threw the error shown above and could not be force-resolved. Such cases need to be resolved individually before the next archival job can archive them.

- For the Pega documentation on case archiving and purging, click here.
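The bulk force-close approach described in the notes above comes down to two steps: select unresolved cases older than a cutoff timestamp, then split them into batches that can each be queued for background processing. The Java sketch below models only that selection and batching logic. WorkItem, its fields, the "older than" test based on creation time, and the batch size are all hypothetical; in Pega, the actual close would still be done by the pxForceCaseClose activity queued through Queue-For-Processing.

```java
import java.time.Instant;
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of the selection and batching behind a bulk force-close.
// WorkItem is an illustrative stand-in for a case, not a Pega class.
final class BulkCloseSelector {

    static final class WorkItem {
        final String id;
        final String status;      // stands in for the case's pyStatusWork
        final Instant createdAt;  // ASSUMPTION: "older than" is measured from creation time

        WorkItem(String id, String status, Instant createdAt) {
            this.id = id;
            this.status = status;
            this.createdAt = createdAt;
        }
    }

    private BulkCloseSelector() {}

    /** Ids of unresolved cases (status not starting with "Resolved-") created before the cutoff. */
    static List<String> selectForClose(List<WorkItem> items, Instant cutoff) {
        List<String> ids = new ArrayList<>();
        for (WorkItem item : items) {
            boolean unresolved = item.status == null || !item.status.startsWith("Resolved-");
            if (unresolved && item.createdAt.isBefore(cutoff)) {
                ids.add(item.id);
            }
        }
        return ids;
    }

    /** Splits ids into batches of at most batchSize, e.g. one queued item per batch. */
    static List<List<String>> batches(List<String> ids, int batchSize) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be > 0");
        }
        List<List<String>> result = new ArrayList<>();
        for (int start = 0; start < ids.size(); start += batchSize) {
            int end = Math.min(start + batchSize, ids.size());
            result.add(new ArrayList<>(ids.subList(start, end)));
        }
        return result;
    }

    public static void main(String[] args) {
        Instant cutoff = Instant.parse("2021-01-01T00:00:00Z");
        List<WorkItem> items = new ArrayList<>();
        items.add(new WorkItem("W-1", "New", Instant.parse("2019-05-01T00:00:00Z")));
        items.add(new WorkItem("W-2", "Resolved-Completed", Instant.parse("2019-06-01T00:00:00Z")));
        items.add(new WorkItem("W-3", "Pending-Review", Instant.parse("2020-02-01T00:00:00Z")));
        items.add(new WorkItem("W-4", "Open", Instant.parse("2021-03-01T00:00:00Z")));
        List<String> ids = selectForClose(items, cutoff);
        System.out.println(ids);              // [W-1, W-3]
        System.out.println(batches(ids, 1));  // [[W-1], [W-3]]
    }
}
```

Batching keeps each queued unit of work small, so one case that fails to force-resolve (as described above) affects only its own batch rather than the whole run.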

@Will Cho - To modify the JS settings, we need to Save As the OOTB rule into an open application ruleset. This can mean one of two things: either the JS must be saved into a ruleset common to every application stack in your system so that archival works for every application, or you can Save As per application and use the access group setting to set the "scope" of the cases to include. Can you comment on these statements, please?