
Accenture

AU

Last activity: 19 Sep 2023 5:36 EDT

Setting up Kafka and Integrating It with Pega Using a Stream Dataset, Event Strategy and Dataflow

One of the key design patterns I have come across while working with clients over the last couple of years is the requirement for:

- Ingesting data from data streams

- Detecting patterns and insights

- Acting on the insight where needed

The solution I have often recommended in these scenarios is the powerful combination of a Stream Dataset, a Dataflow and an Event Strategy, particularly when the data volume is high.

Initially this was used mostly by Pega Marketing / Customer Decision Hub clients, but it has also proved quite popular for Process AI.

Proposing this pattern is one thing, but I firmly believe the best way to get comfortable with any pattern is to try it out on a local instance, hence this article.

In this article I'll document the steps (on Windows 10) to:

- Set up a local Kafka instance

- Connect it to Pega using a Stream Dataset

- Use an Event Strategy to detect patterns

- Bring it all together using a Dataflow

Setting up Kafka

There are quite a few articles on this, some of them out of date, so I'll keep it very simple and describe the process for the latest version of Kafka at the time of writing.

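- Go to https://kafka.apache.org/downloads

- Download a binary release. I used the build for Scala 2.13 (the 2.13 in the file name is the Scala version, not the Kafka version). These steps rely on ZooKeeper, which was removed in Kafka 4.0, so choose a 3.x release to follow along.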

- Unzip/extract the archive using your favorite utility (I extracted it to “C:\Apps\Kafka”).

- Create the data and logs folders, plus kafka and zookeeper subfolders under data. I created C:\Apps\Kafka\logs, C:\Apps\Kafka\data, C:\Apps\Kafka\data\kafka and C:\Apps\Kafka\data\zookeeper.

- Go to the config folder, open zookeeper.properties and set dataDir; for me it was “dataDir=C:/Apps/Kafka/data/zookeeper”.

- In the same config folder, open server.properties and set log.dirs; for me it was “log.dirs=C:/Apps/Kafka/logs”. Note that log.dirs is where Kafka stores the topic data itself, not application logs, so C:/Apps/Kafka/data/kafka (created above) is an equally natural choice.

- Go back to the Kafka root folder (C:\Apps\Kafka) and create batch files to start and stop Kafka and ZooKeeper (you can also run the commands from a command prompt). I created batch files because they make starting and stopping easy.

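```bat
rem startkafka.bat
cd /d "%~dp0bin\windows"
kafka-server-start.bat ../../config/server.properties

rem startzookeeper.bat
cd /d "%~dp0bin\windows"
zookeeper-server-start.bat ../../config/zookeeper.properties

rem stopkafka.bat
cd /d "%~dp0bin\windows"
kafka-server-stop.bat

rem stopzookeeper.bat
cd /d "%~dp0bin\windows"
zookeeper-server-stop.bat
```

Save each snippet as a separate file in C:\Apps\Kafka. %~dp0 expands to the folder containing the batch file, so the scripts work regardless of which directory they are launched from.

Start ZooKeeper first (using the batch file or a command prompt), and start Kafka only once ZooKeeper is running.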
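If you want to script the "wait for ZooKeeper, then start Kafka" step instead of watching the console, a small port check is enough. The following is a minimal sketch, not part of the original setup: it assumes Python 3.8+ is installed and that ZooKeeper listens on its default clientPort 2181 (Kafka on 9092, as used in this post). A successful TCP connect only shows that something is listening, not that the service has fully initialised.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout_s: float = 30.0, interval_s: float = 0.5) -> bool:
    """Poll host:port until a TCP connection succeeds or timeout_s elapses.

    Returns True as soon as a connection is accepted, False on timeout.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            # Connecting and immediately closing is enough to prove a listener exists.
            with socket.create_connection((host, port), timeout=interval_s):
                return True
        except OSError:
            # Connection refused or timed out: retry until the deadline passes.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval_s)
```

For example, run startzookeeper.bat, call `wait_for_port("localhost", 2181)`, and only launch startkafka.bat once it returns True; the same call with port 9092 confirms the broker is up before creating topics.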

Now that everything is up and running, it's time to create a Kafka topic and post some messages.

- Once both are running, open another command prompt and navigate to the Kafka bin\windows folder; for me it was “C:\Apps\Kafka\bin\windows”.

- Create a topic using the command:

```bat
kafka-topics.bat --create --topic DeviseAlerts --bootstrap-server localhost:9092
```

Here DeviseAlerts is the topic name and localhost:9092 is the broker host and port.

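- Connect to the topic as a producer:

```bat
kafka-console-producer.bat --topic DeviseAlerts --bootstrap-server localhost:9092
```

Then post some messages. You can post anything, but JSON is preferable since Pega handles JSON out of the box. The console producer sends each line as a separate message, so keep each JSON message on a single line. The sample I used is below: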

```json
{ "devisename": "SmartMeter", "deviseid": "SM0824832049832", "alarm": { "alarmid": "HP398432", "alarmtype": "tamper", "threshold": 200.78, "reading": 239, "date": "2022-08-12T03:40:49.453Z" } }
```
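To exercise the Event Strategy condition described at the end of this post (more than 5 events from one device within an hour), you need several messages for the same device in quick succession. Here is a minimal sketch that generates them as single-line JSON; the field names follow the sample above, while the alarm ids, readings and spacing are made-up test values, and the script name used below is hypothetical.

```python
import json
from datetime import datetime, timedelta
from typing import List


def make_alarm_messages(device_id: str, count: int, start: datetime, step: timedelta) -> List[str]:
    """Build `count` single-line JSON alarm messages for one device, `step` apart.

    `start` is treated as a naive UTC timestamp; "Z" is appended to match the sample.
    """
    messages = []
    for i in range(count):
        ts = start + i * step
        payload = {
            "devisename": "SmartMeter",
            "deviseid": device_id,
            "alarm": {
                "alarmid": f"HP{398432 + i}",  # made-up, unique per message
                "alarmtype": "tamper",
                "threshold": 200.78,
                "reading": 239 + i,  # made-up readings above the threshold
                "date": ts.isoformat(timespec="milliseconds") + "Z",
            },
        }
        # json.dumps without indent emits one line, i.e. one console-producer message.
        messages.append(json.dumps(payload))
    return messages


for line in make_alarm_messages("SM0824832049832", 6, datetime(2022, 8, 12, 3, 40, 49, 453000), timedelta(minutes=5)):
    print(line)
```

Because the console producer reads standard input, the output can be piped straight in, e.g. `python make_alarms.py | kafka-console-producer.bat --topic DeviseAlerts --bootstrap-server localhost:9092`.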

Next, connect to the topic using a Stream Dataset

This is quite simple.

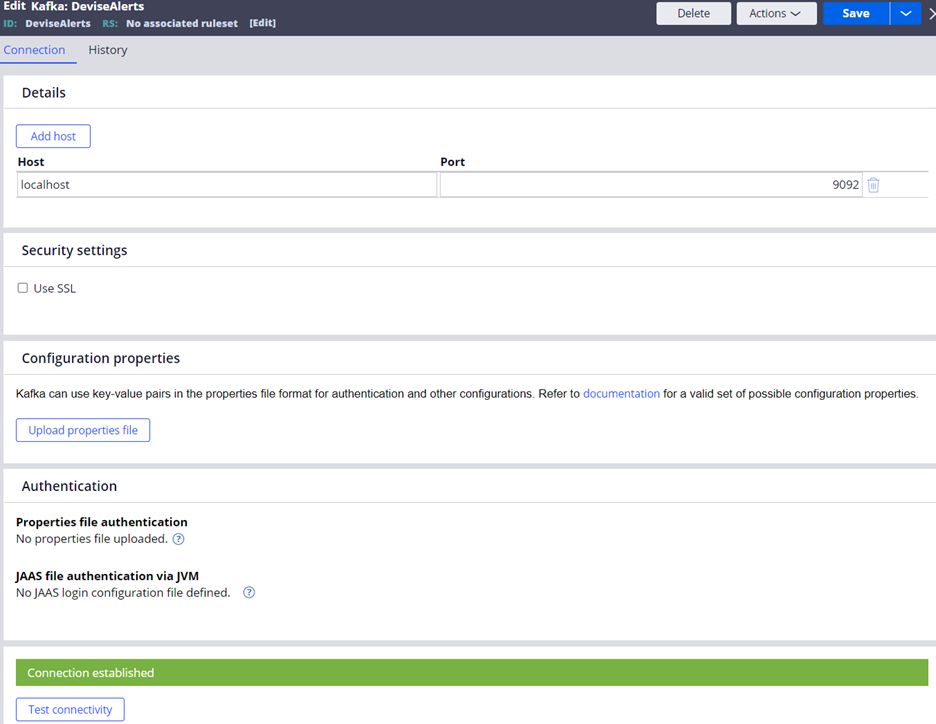

- First, create a Kafka configuration instance (Records --> SysAdmin --> Kafka), fill in the details as shown below and click Test connectivity.

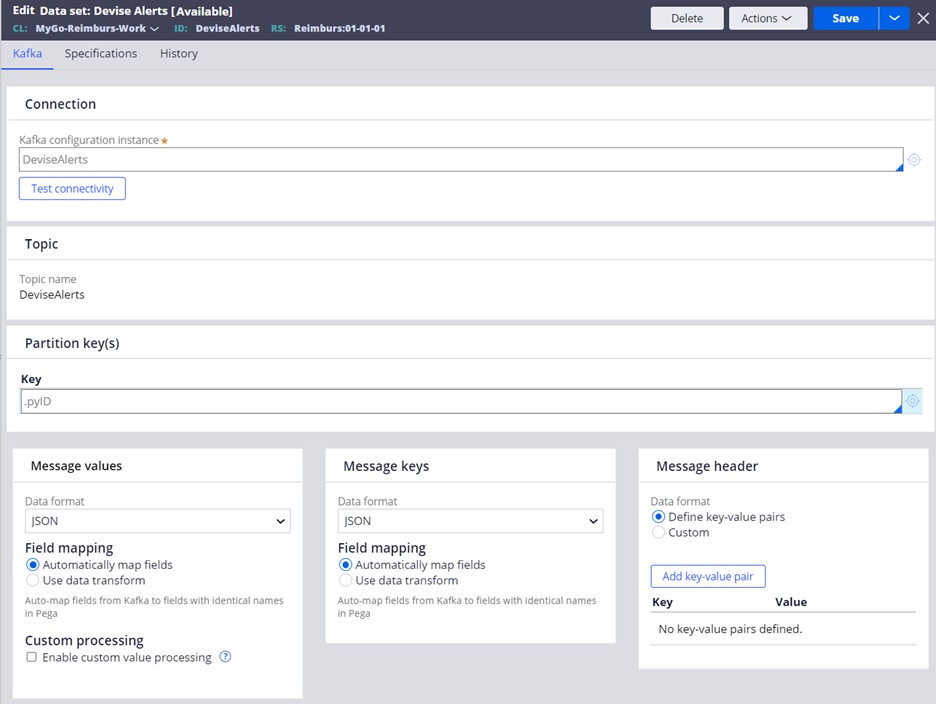

- Then create a data set (Records --> Data Model --> Data Set). You can give it the same name as the topic and set its type to Kafka, so mine was named DeviseAlerts.

- Run the data set and view the results.

That's it for connectivity. Optionally, use a data transform to map the JSON to properties on the clipboard.
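To see which properties such a data transform would need to populate, the sketch below flattens the sample message into dotted property paths. It is an illustration only, not Pega code: it assumes the data class mirrors the JSON structure (with `alarm` as an embedded page) and it ignores JSON arrays, which would correspond to page lists.

```python
import json
from typing import Any, Dict


def flatten(obj: Dict[str, Any], prefix: str = "") -> Dict[str, Any]:
    """Flatten nested JSON objects into dotted paths such as '.alarm.alarmtype'."""
    flat: Dict[str, Any] = {}
    for key, value in obj.items():
        path = f"{prefix}.{key}"
        if isinstance(value, dict):
            # A nested object corresponds to an embedded page: recurse into it.
            flat.update(flatten(value, path))
        else:
            # A scalar corresponds to a single-value property.
            flat[path] = value
    return flat


sample = '{ "devisename": "SmartMeter", "deviseid": "SM0824832049832", "alarm": { "alarmid": "HP398432", "alarmtype": "tamper", "threshold": 200.78, "reading": 239, "date": "2022-08-12T03:40:49.453Z" } }'
for path, value in flatten(json.loads(sample)).items():
    print(path, "=", value)
```

Each printed path (for instance `.alarm.alarmtype`) is a candidate target for one Set action in the data transform.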

Now create an Event Strategy

This, again, is really simple.

- Create an instance of "Event Strategy" (Decision --> Event strategy)
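In Pega the Event Strategy is built on a canvas rather than in code, but the condition used later in this post (more than 5 events from a device in a 1-hour window) is easy to state programmatically. The sketch below models that logic in Python. The post does not say which window type was configured, so a sliding window is an assumption here, as is in-order arrival of events per device; the class name is hypothetical.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta
from typing import Deque, Dict


class SlidingWindowCounter:
    """Flag a device when more than `threshold` events fall within `window`.

    Assumes events for each device arrive in timestamp order.
    """

    def __init__(self, threshold: int = 5, window: timedelta = timedelta(hours=1)):
        self.threshold = threshold
        self.window = window
        self.events: Dict[str, Deque[datetime]] = defaultdict(deque)

    def add(self, device_id: str, ts: datetime) -> bool:
        """Record one event and return True if the device now exceeds the threshold."""
        q = self.events[device_id]
        q.append(ts)
        # Evict events that are a full window (or more) older than the newest one.
        while q and ts - q[0] >= self.window:
            q.popleft()
        return len(q) > self.threshold


counter = SlidingWindowCounter()
t0 = datetime(2022, 8, 12, 3, 0)
for i in range(6):
    print(i + 1, counter.add("SM0824832049832", t0 + timedelta(minutes=5 * i)))
```

With a tumbling window instead, counts reset at fixed boundaries, so the same six events could be split across two windows and never trigger; which behaviour you want is a design decision for the strategy.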

Finally, create the data flow

This, too, is really simple.

- Create an instance of "Data Flow" (Data Model --> Data Flow)
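Conceptually, the data flow wires a source (the Kafka data set) through the Event Strategy to a destination that creates the case. The sketch below shows only that wiring in plain Python, with hypothetical function names and a stand-in detector; it is not how Pega executes data flows, and the choice to skip malformed messages is this sketch's own, not a statement about Pega's behaviour.

```python
import json
from typing import Callable, Iterable, List


def run_data_flow(
    source: Iterable[str],
    detect: Callable[[dict], bool],
    create_case: Callable[[dict], None],
) -> int:
    """Source -> parse -> detect -> destination. Returns the number of cases created."""
    created = 0
    for raw in source:
        try:
            event = json.loads(raw)  # each Kafka record carries one JSON message
        except json.JSONDecodeError:
            continue  # this sketch simply skips records that are not valid JSON
        if detect(event):
            create_case(event)  # destination: hand the triggering event to case creation
            created += 1
    return created


cases: List[dict] = []
records = [
    '{"deviseid": "SM1", "alarm": {"alarmtype": "tamper"}}',
    "not json",
    '{"deviseid": "SM2", "alarm": {"alarmtype": "other"}}',
]
print(run_data_flow(records, lambda e: e["alarm"]["alarmtype"] == "tamper", cases.append))
```

Passing the detector and destination in as functions keeps the pipeline shape separate from the pattern logic, which mirrors how the Event Strategy is a separate rule plugged into the data flow.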

With everything in place, we need to run the dataflow, but first set up Pega for stream processing:

- Stop the Pega Personal Edition instance.

- Go to the tomcat\bin folder of Personal Edition, open setenv.bat and update -DNodeType to the following:

```
-DNodeType=Search,WebUser,BackgroundProcessing,Batch,RealTime,Stream
```

- Restart Personal Edition. This enables batch and real-time processing, which you can verify on the Decisioning: Services landing page.

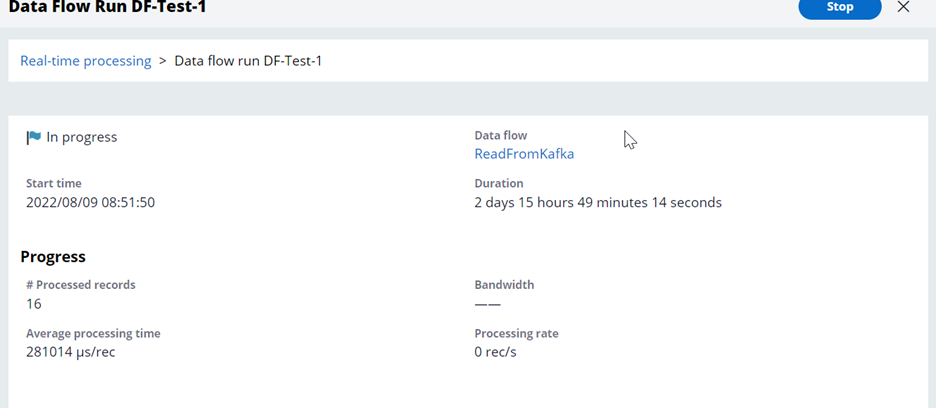
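If you switch node types often, the setenv.bat edit can be scripted. This is a minimal sketch: the node-type list is the one from this post, but the sample `set CATALINA_OPTS=...` line is made up for illustration, and the real setenv.bat in your Personal Edition install will contain different surrounding options.

```python
import re
from typing import List

# Node types from this post; Batch, RealTime and Stream enable stream processing.
REQUIRED_NODE_TYPES = ["Search", "WebUser", "BackgroundProcessing", "Batch", "RealTime", "Stream"]


def set_node_types(setenv_text: str, node_types: List[str]) -> str:
    """Replace the value of every -DNodeType=... option in the given setenv.bat text."""
    new_option = "-DNodeType=" + ",".join(node_types)
    # The value runs until whitespace or a closing quote.
    updated, count = re.subn(r'-DNodeType=[^\s"]*', lambda _m: new_option, setenv_text)
    if count == 0:
        raise ValueError("no -DNodeType option found")
    return updated


# Hypothetical sample line; the real file differs.
line = "set CATALINA_OPTS=%CATALINA_OPTS% -DNodeType=WebUser,BackgroundProcessing,Search -Xmx4096m"
print(set_node_types(line, REQUIRED_NODE_TYPES))
```

In practice you would read setenv.bat with `pathlib.Path.read_text`, keep a backup copy, write the updated text back, and then restart Personal Edition as described above.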

Finally, open the dataflow and run it. This creates a Dataflow run, and that's it, all done.

Any messages now sent to the Kafka topic will be processed by the data flow, and if the condition in the Event Strategy is true (in my case, when more than 5 events arrive from a device within a 1-hour window), a case is created to investigate the alarm.