Question

Carelon Global Solutions

IN

Last activity: 13 Sep 2022 4:21 EDT

Best Approach to copy large amount of data from one table to other table.

Hi All,

I have a requirement to copy all the records matching my criteria (approximately 5Crore) from Table 'A' to Table 'B' and delete the same from Table 'A'. Looking for some better approaches to do this without any performance impact.

Note: Table A and B are internal pega tables.

Thanks in Advance!.

***Edited by Moderator Marije to change type from Pega Academy to Product***

-

Like (0)

-

Share this page Facebook Twitter LinkedIn Email Copying... Copied!

Accepted Solution

Updated: 13 Sep 2022 4:21 EDT

Carelon Global Solutions

IN

@m.caldagThanks for the reply. We have configured job scheduler with custom logic.

Bentego

TR

Dear @DivyaTejaswiP

I would like to understand the goal what will be the value when you copy 50 million records from table A to table B in the same Pega DB?

Pega Purge/Archive tool may help you if you are willing to export and delete resolved older cases from the table but in this case you need to import exported zip files to new table somehow.

Regards.

Updated: 25 Aug 2022 8:53 EDT

Carelon Global Solutions

IN

@m.caldagHi , Thanks for the Reply. Table A is mapped to multiple classes(5) to store the data as history initially. Now we are creating new table for each class. So we need to copy the existing records of specific class from Table A to the new table created respectively. Going forward records will be saved to the respective table mapped to that class

Bentego

TR

Hi @DivyaTejaswiP, Clear. I have an idea. I will explain you by giving solution in the next post.

-

Divya Tejaswi Potukuchi

Updated: 7 Sep 2022 4:11 EDT

Bentego

TR

Hi @DivyaTejaswiP,

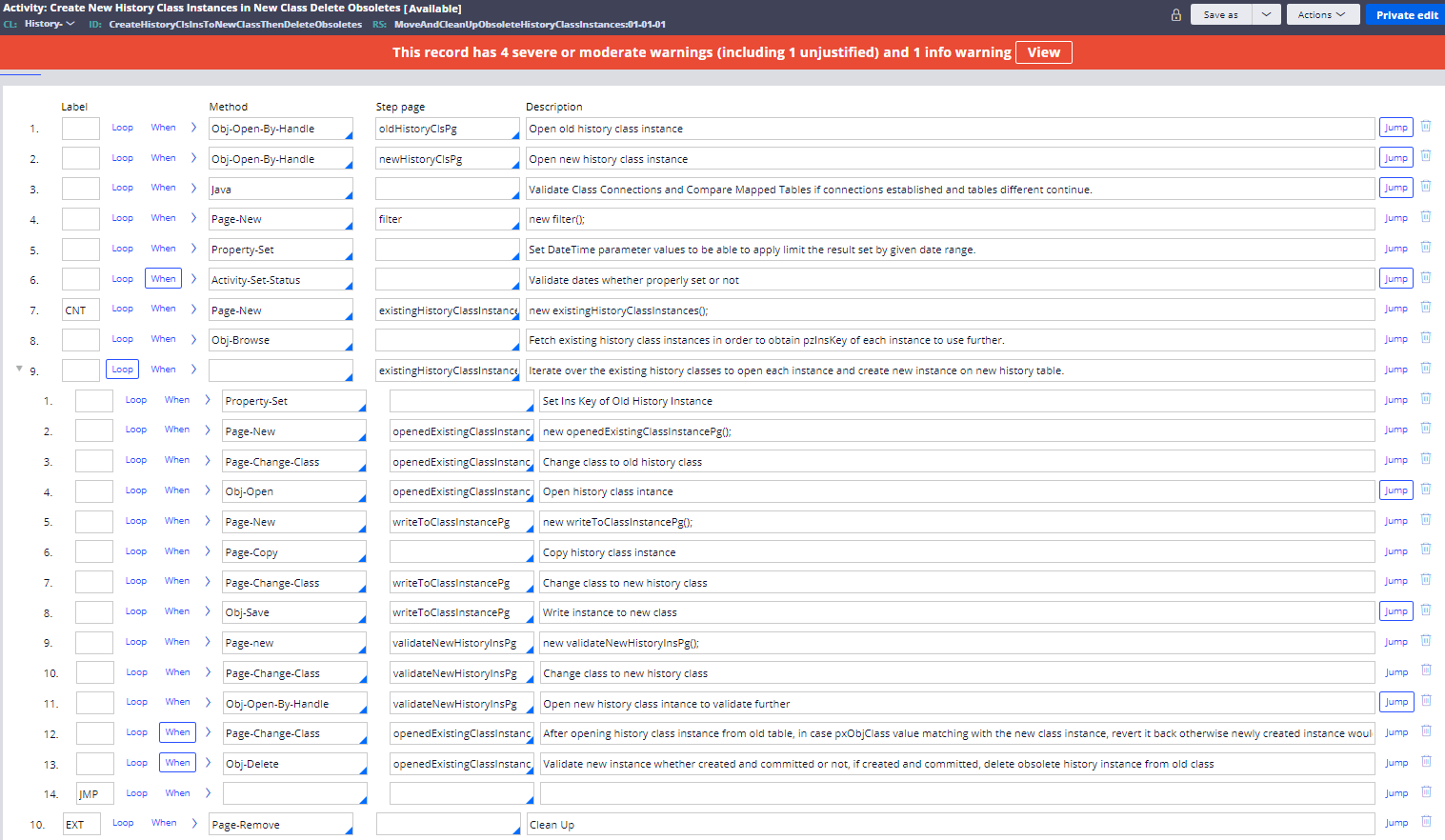

I've analyzed your problem statement and generated a solution attached. I am not claiming that this is the best approach but it is an approach that utilize Pega platform capabilities to provide a solution on your problem. Kindly import attached jar (product) in your PRPCPersonalEdition (8.7.#) cluster. You can associate the rulesets under attached product with DMSample application. After importing product rule to your personal environment, kindly examine the rules especially under MoveAndCleanUpObsoleteHistoryClassInstances ruleset then experiment to be able to fully understand solution then you may move or create your own Activity and Job Schedulers rules with benefiting from the rules under MoveAndCleanUpObsoleteHistoryClassInstances ruleset to your organization's development environment and go ahead further for upper environments.

Rules under MoveAndCleanUpObsoleteHistoryClassInstances ruleset are actually going to solve the issue. MoveAndCleanUpObsoleteHistoryClassInstances_UT ruleset includes some activities which are bulk creating dummy cases by given Class and their histories automatically, deleting cases and testing some date filter condition.

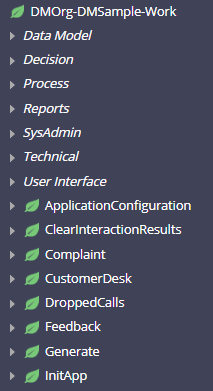

First of all, I predicted your scenario that you have a one class group for your application's cases like below.

Hi @DivyaTejaswiP,

I've analyzed your problem statement and generated a solution attached. I am not claiming that this is the best approach but it is an approach that utilize Pega platform capabilities to provide a solution on your problem. Kindly import attached jar (product) in your PRPCPersonalEdition (8.7.#) cluster. You can associate the rulesets under attached product with DMSample application. After importing product rule to your personal environment, kindly examine the rules especially under MoveAndCleanUpObsoleteHistoryClassInstances ruleset then experiment to be able to fully understand solution then you may move or create your own Activity and Job Schedulers rules with benefiting from the rules under MoveAndCleanUpObsoleteHistoryClassInstances ruleset to your organization's development environment and go ahead further for upper environments.

Rules under MoveAndCleanUpObsoleteHistoryClassInstances ruleset are actually going to solve the issue. MoveAndCleanUpObsoleteHistoryClassInstances_UT ruleset includes some activities which are bulk creating dummy cases by given Class and their histories automatically, deleting cases and testing some date filter condition.

First of all, I predicted your scenario that you have a one class group for your application's cases like below.

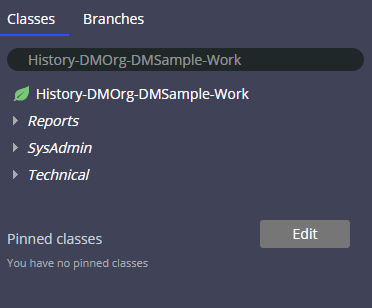

Those different case classes' instances are stored under separate database tables. On the other side, their history class instances are stored under one table because we have only one class group for their History like below.

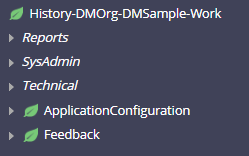

I think you created new class groups for the history instances of particular case which you are planning to store separately like below and they mapped to new database tables. I am assuming that newly created history database table, their indexes and other related stuff literally same with the existing History table which is storing history instances already.

Okay now let's focus to solution by following example what we are going to do is that we are going to move particular set of history class instances of let's say Feedback cases from database table which are associated with History-DMOrg-DMSample-Work to new database table which is mapped with History-DMOrg-DMSample-Work-Feedback class. We are going to leverage from the CreateHistoryClsInsToNewClassThenDeleteObsoletes activity.

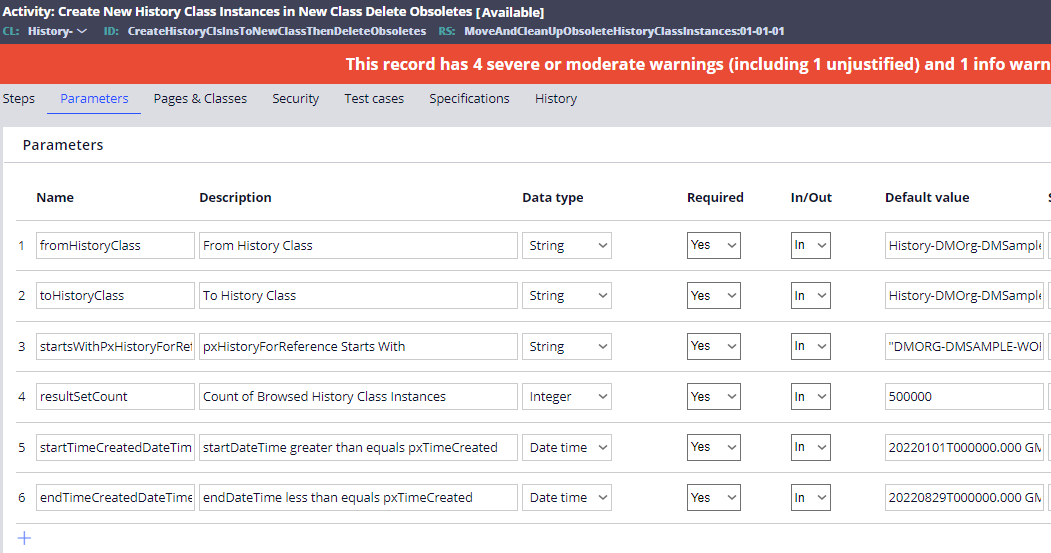

CreateHistoryClsInsToNewClassThenDeleteObsoletes activity is getting set of parameters.

fromHistoryClass parameter's value is a class which is storing existing instances.

toHistoryClass parameter's value is a class where are going to move history instances from old table.

startsWithPxHistoryForReference parameter's value is for filtering the history instances from the existing history table as it is storing other history instances of other cases.

resultSetCount parameter value is limiting the resultSet of existing history instances that they are going to be fetched through Obj-Browse method from the existing history table as we have millions records in that table based on your scenario.

startTimeCreatedDateTime parameter value is starting pxTimeCreated of history instances.

endTimeCreatedDateTime parameter value is end pxTimeCreated of history instances. We are filtering the query result set with the date range of pxTimeCreated attribute's value.

CreateHistoryClsInsToNewClassThenDeleteObsoletes activity is firstly testing the mapped tables connection and their state and validating whether mapped database tables are really different or not. It is then validating the datetime filters. Once validations done, it is getting the existing history instances by given filter condition eventually iterating over the result set and writing the instance to new table and validating newly created history instance whether really created or not and finally delete history instance from the old table.

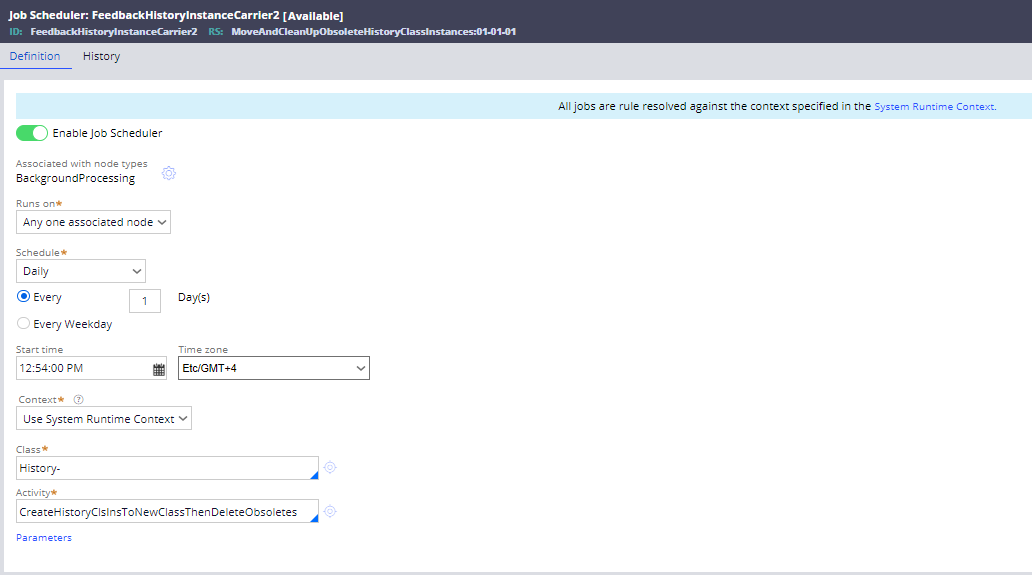

We should have a set of Job Scheduler rules (configure each one as any one associated BackGround processing node) which are going to associate with CreateHistoryClsInsToNewClassThenDeleteObsoletes activity rule by definitely with different parameters values especially date range. For instance, you can create 4 Job schedulers for year 2022 with giving the quarterly (three months) date range filter. I highly recommend you to write a Report Definition that is going to return count of history instances monthly and yearly. You can determine the count of Job Schedulers based on the light of retrieved group data as you have 50 million records. Job scheduler rules would be like example below.

Finally, test your changes with both manually executing activity for the some set of data and through Job Schedulers. I highly recommend you to simulate with creating large amount of history instances that are going to be moved or leverage existing large amount of history instances if any on pre-production if possible then before going production and starting executing, coordinate with DBA to get backup of the existing history instances.

Kindly try it and let me know the result whether it works or not. In case you need to discuss something else related with the subj, kindly join my Slack channel to discuss further.

Regards.

Mert.

-

Divya Tejaswi Potukuchi

Accepted Solution

Updated: 13 Sep 2022 4:21 EDT

Carelon Global Solutions

IN

@m.caldagThanks for the reply. We have configured job scheduler with custom logic.

-

Mert Caldag Venkat Ramana Dharmapuram

Bentego

TR

@DivyaTejaswiP

Perfect, It's good to know that.

Regards.

Mert.

TATA Consultancy Services

IN

@DivyaTejaswiP Rename the Table A as Table B and create table same structure as Table B in database .

Updated: 25 Aug 2022 4:37 EDT

Carelon Global Solutions

IN

@Nadimuthu T Hi, Thanks for the Reply. We will still need Table A as it has other data as well apart from what we need to copy and delete. Table B we created is to store the data of old cases of a specific class. Also going further class will mapped to Table B only. Pls suggest!

TATA Consultancy Services

IN

@DivyaTejaswiP Create Procedure and apply your copy logic using cursor method. Please make sure index to be applied for the when clause column.

-

Divya Tejaswi Potukuchi

Lventur

IN

You can make use of data flow to move the data from one table to another in more optimized way. Please check below mentioned articles to know how to implement this logic by using data flow.

Batch Data flow & Real-time Data flow in Pega - OneStopPega

What is Data Flow? How to use Data Flow in PEGA Platform? - OneStopPega

-

Divya Tejaswi Potukuchi

Carelon Global Solutions

IN

@Gunasekaran Baskaran , Thanks for the Reply. Will check on this

Updated: 29 Aug 2022 7:59 EDT

Ernst & Young LLP

MT

What I understood based on your replies to this thread -

- Currently you have bunch of history classes mapped to the same history table.

- This approach most likely led to performance issue in your app.

- You decided to create separate history tables for each of such classes.

Given that you are not building your app from scratch, and have already that table over populated with data, my suggestion would be not to have separate physical tables as that would requires to solve data migration challenges.

Instead you can use DB table partitioning approach[1] (based on history class names) which will have the same effect as dedicated separate table for each of such classes.

Note that ideally you should have separate history table for each (history) classes, but that should have been detected before production go-live and not after the table is populated with 5 core data.

DB table partitioning solution will act as a work around to your current problem.

Please check with your DBA if you are planning to follow this approach, and ensure that you test in a lower environment with similar data volume to verify if this solves your issue.

Thanks

[1] https://www.oracle.com/technetwork/database/options/partitioning/partitioning-wp-12c-1896137.pdf

-

Divya Tejaswi Potukuchi