Overview of the Scenario Testing features on the Pega platform

This post covers the high-level capabilities and known limitations of the Scenario Testing feature of the Pega Platform. For full details, refer to the Scenario Testing documentation.

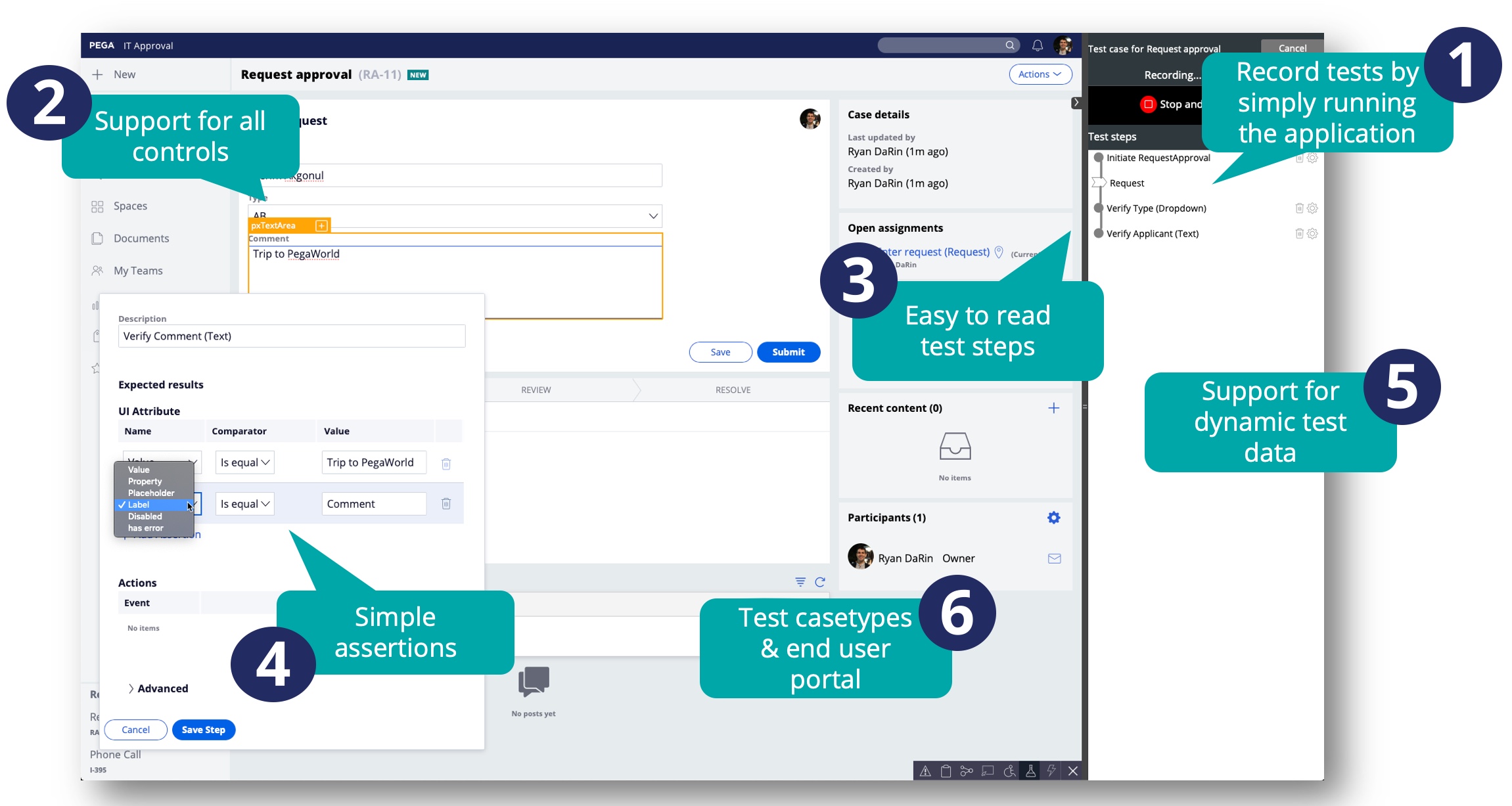

Scenario testing allows Pega application authors to create UI-based, end-to-end scenarios to test a Pega application. It has the following capabilities:

- Record tests by simply running the application - Creating a test case is as simple as running the end-user application. User input is captured as the test data for subsequent test runs.

- Support for all out-of-the-box UI controls - All platform-supplied UI controls are supported directly, without any additional configuration.

- Easy-to-read steps - Each test case consists of simple steps that describe the scenario, so all stakeholders can understand it.

- Simple validations - Validations are direct comparisons of actual versus expected values.

- Support for dynamic test data - Dynamic data can be used for the expected user input value or for the expected output value. Dynamic data is specified through a predefined data page, D_pyScenarioTestData.

- Test case types and end-user portals - Test cases can target either the end-user portal after login or individual case types.

Creating Scenario Tests

Scenario tests can only be captured in the context of the application portal. They cannot be recorded from Dev Studio, App Studio, or any of the other development portals. Keep the following in mind:

- Tests can only be captured through the Test recorder, which is accessed through the run-time toolbar.

- The Create test case button lets you create either a portal test or a case type test.

- Portal-level tests capture user actions from the header and footer, the left-side menu, and other navigation elements.

- Case-level tests capture all user interactions starting from the New screen of the case type. Interactions outside the case are ignored.

- After you select the type of test, an orange selector appears when you hover over any supported UI control.

- A test case step is captured for every user interaction with a control that shows the orange selector.

- Add explicit validations by clicking the "+" icon in the orange selector. An overlay displays options for additional assertions.

- When recording is complete, click Stop and Save to create the test case.

Running Scenario Tests from Deployment Manager

Running scenario tests from Deployment Manager requires a Selenium runner. You can use a cloud testing service such as CrossBrowserTesting, BrowserStack, or Sauce Labs, or a standalone Selenium runner such as Selenium Server or Selenium Grid.

For details, refer to the Deployment Manager help on running scenario tests.
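
Before pointing Deployment Manager at a standalone runner, it can help to confirm that the runner is reachable and ready. The following is a minimal Python sketch. It assumes Selenium Grid 4, or another runner that implements the W3C WebDriver Status endpoint (GET /status), which returns JSON whose value.ready field indicates whether new sessions can be created. The URL http://localhost:4444 and the function names are hypothetical placeholders. This check is not part of Pega or Deployment Manager.

```python
import json
import urllib.request

# Hypothetical runner address; replace with your own Selenium Grid or server URL.
GRID_URL = "http://localhost:4444"


def grid_is_ready(payload: dict) -> bool:
    """Return True if a W3C WebDriver /status payload reports the runner as ready."""
    value = payload.get("value")
    # The Status response wraps its fields in a "value" object with a boolean "ready".
    return isinstance(value, dict) and value.get("ready") is True


def check_grid(base_url: str = GRID_URL, timeout: float = 5.0) -> bool:
    """Query GET {base_url}/status and report whether the runner accepts new sessions."""
    url = base_url.rstrip("/") + "/status"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            payload = json.load(response)
    except (OSError, ValueError):
        # Unreachable host, HTTP error, timeout, or a non-JSON body: treat as not ready.
        return False
    return grid_is_ready(payload)


if __name__ == "__main__":
    ready = check_grid()
    print(f"Selenium runner at {GRID_URL} ready: {ready}")
```

Running the script prints whether the runner reports itself as ready. Older Selenium 3 servers typically expose the same endpoint at /wd/hub/status, so adjust the path if you use one.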

How-to guides for running Scenario Tests

- Running Scenario Tests using BrowserStack

- Running Scenario Tests using CrossBrowserTesting

- Running Scenario Tests using Selenium Grid

You can also run Scenario Tests from other pipeline tools, such as Jenkins, by using the associated Pega REST API. For instructions on using this API, see Pega RESTful API for remote execution of scenario tests.

Additional resources

For more information, see the following resources:

- Platform help

- Developer Knowledge Share - Scenario Testing: How to create, run, and edit test cases

These resources cover Scenario Testing in much more depth and are a good starting point.